parPE example: steadystate model - model simulation, optimization, analysis¶

This notebooks demonstrates basic use of parPE for parameter estimation.

Prerequisites¶

This notebook requires:

a successful parPE with CMake options

BUILD_EXAMPLES=ON(default).an installation of the parPE Python package (

${PARPE_SOURCE_DIR}/python/) in the Python environment in which this notebook is run (ideally thebuild/venv/virtual environment)

This notebook assumes that this file is used from its default location and that parPE was built inside ${PARPE_SOURCE_DIR}/build/. If this is not the case, adapt parpe_source_root and parpe_build_root in the following block correspondingly.

[26]:

import amici

import os

import sys

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import h5py

from importlib import reload

import parpe

# set paths

parpe_source_root = os.path.abspath('../../../')

parpe_build_root = os.path.join(parpe_source_root, 'build')

model_source_dir = f'{parpe_build_root}/examples/parpeamici/steadystate/steadystate_scaled-prefix/src/steadystate_scaled/model_steadystate_scaled'

example_binary_dir = f'{parpe_build_root}/examples/parpeamici/steadystate/'

example_data_dir = f'{parpe_build_root}/examples/parpeamici/steadystate/steadystate_scaled-prefix/src/steadystate_scaled'

optimization_options_py = f'{parpe_source_root}/misc/optimizationOptions.py'

# MPI launcher and options

mpiexec = "mpiexec -n 4 --allow-run-as-root --oversubscribe"

[27]:

# rebuild example

!cd {parpe_build_root} && make

-- Found Git: /usr/bin/git (found version "2.25.1")

-- Building version parPE-v0.4.3-37-g5bf1-dirty

[ 0%] Built target get_version

[ 10%] Built target parpecommon

[ 21%] Built target parpeoptimization

[ 25%] Built target parpeloadbalancer

[ 37%] Built target parpeamici

[ 39%] Built target parpe

[ 45%] Built target unittests_common

[ 50%] Built target unittests_loadbalancer

[ 58%] Built target unittests_optimization

Setting up virtual environment...

[ 58%] Built target setup_venv

[ 59%] Creating test data using hierarchicalOptimizationTest.py

...

----------------------------------------------------------------------

Ran 3 tests in 0.000s

OK

[ 59%] Built target prepare_test_hierarchical_optimization

[ 67%] Built target unittests_amici

[ 69%] Built target example_loadbalancer

[ 81%] Built target steadystate_scaled

[ 84%] Built target example_steadystate

[ 88%] Built target example_steadystate_multi

[ 92%] Built target example_steadystate_multi_simulator

[100%] Built target test_steadystate

[28]:

# run make test to generated all output files required below

!cd {parpe_build_root} && make test

Running tests...

Test project /home/dweindl/src/parPE_2/build

Start 1: testingMisc.testTenToMinusInf

1/80 Test #1: testingMisc.testTenToMinusInf .................................................. Passed 0.00 sec

Start 2: testingMisc.testWithinTolerance

2/80 Test #2: testingMisc.testWithinTolerance ................................................ Passed 0.00 sec

Start 3: testingMisc.testCheckEqualArray

3/80 Test #3: testingMisc.testCheckEqualArray ................................................ Passed 0.00 sec

Start 4: testingMisc.testRandInt

4/80 Test #4: testingMisc.testRandInt ........................................................ Passed 0.01 sec

Start 5: commonMisc.testBacktrace

5/80 Test #5: commonMisc.testBacktrace ....................................................... Passed 0.00 sec

Start 6: commonMisc.testFilexists

6/80 Test #6: commonMisc.testFilexists ....................................................... Passed 0.00 sec

Start 7: commonMisc.testCreateDirectoryIfNotExists

7/80 Test #7: commonMisc.testCreateDirectoryIfNotExists ...................................... Passed 0.00 sec

Start 8: commonMisc.testRecursiveMkpath

8/80 Test #8: commonMisc.testRecursiveMkpath ................................................. Passed 0.00 sec

Start 9: commonMisc.testRandDouble

9/80 Test #9: commonMisc.testRandDouble ...................................................... Passed 0.01 sec

Start 10: commonMisc.testFillArrayRandomDoubleSameInterval

10/80 Test #10: commonMisc.testFillArrayRandomDoubleSameInterval ............................... Passed 0.00 sec

Start 11: commonMisc.testFillArrayRandomDoubleIndividualInterval

11/80 Test #11: commonMisc.testFillArrayRandomDoubleIndividualInterval ......................... Passed 0.01 sec

Start 12: commonMisc.testMpi

12/80 Test #12: commonMisc.testMpi ............................................................. Passed 0.30 sec

Start 13: commonMisc.runInParallelAndWaitForFinish

13/80 Test #13: commonMisc.runInParallelAndWaitForFinish ....................................... Passed 0.01 sec

Start 14: commonMisc.strFormatCurrentLocaltime

14/80 Test #14: commonMisc.strFormatCurrentLocaltime ........................................... Passed 0.00 sec

Start 15: logging.printDebugInfoAndWait

15/80 Test #15: logging.printDebugInfoAndWait .................................................. Passed 0.01 sec

Start 16: logging.misc

16/80 Test #16: logging.misc ................................................................... Passed 0.00 sec

Start 17: logging.printMPIInfo

17/80 Test #17: logging.printMPIInfo ........................................................... Passed 0.00 sec

Start 18: logging.logProcessStats

18/80 Test #18: logging.logProcessStats ........................................................ Passed 0.01 sec

Start 19: costFunction.mseZero

19/80 Test #19: costFunction.mseZero ........................................................... Passed 0.01 sec

Start 20: costFunction.mseNonzero

20/80 Test #20: costFunction.mseNonzero ........................................................ Passed 0.01 sec

Start 21: costFunction.linearModel

21/80 Test #21: costFunction.linearModel ....................................................... Passed 0.00 sec

Start 22: costFunction.linearModel2

22/80 Test #22: costFunction.linearModel2 ...................................................... Passed 0.01 sec

Start 23: costFunction.linearModel3

23/80 Test #23: costFunction.linearModel3 ...................................................... Passed 0.01 sec

Start 24: hdf5Misc.testOpenExistingFileNoOverwrite

24/80 Test #24: hdf5Misc.testOpenExistingFileNoOverwrite ....................................... Passed 0.01 sec

Start 25: hdf5Misc.testOpenExistingFileOverwrite

25/80 Test #25: hdf5Misc.testOpenExistingFileOverwrite ......................................... Passed 0.01 sec

Start 26: hdf5Misc.testMutexGetLock

26/80 Test #26: hdf5Misc.testMutexGetLock ...................................................... Passed 0.01 sec

Start 27: hdf5Misc.testErrorStackWalker

27/80 Test #27: hdf5Misc.testErrorStackWalker .................................................. Passed 0.01 sec

Start 28: hdf5Misc.testCreateGroup

28/80 Test #28: hdf5Misc.testCreateGroup ....................................................... Passed 0.01 sec

Start 29: hdf5Misc.testCreateExistingGroup

29/80 Test #29: hdf5Misc.testCreateExistingGroup ............................................... Passed 0.01 sec

Start 30: hdf5Misc.testEnsureGroupExists

30/80 Test #30: hdf5Misc.testEnsureGroupExists ................................................. Passed 0.01 sec

Start 31: hdf5Misc.testStringAttribute

31/80 Test #31: hdf5Misc.testStringAttribute ................................................... Passed 0.01 sec

Start 32: hdf5Misc.testDatasetDimensions

32/80 Test #32: hdf5Misc.testDatasetDimensions ................................................. Passed 0.01 sec

Start 33: unittests_loadbalancer

33/80 Test #33: unittests_loadbalancer ......................................................... Passed 0.00 sec

Start 34: multiStartOptimization.testMultiStartOptimization

34/80 Test #34: multiStartOptimization.testMultiStartOptimization .............................. Passed 2.20 sec

Start 35: minibatchOptimization.getBatches

35/80 Test #35: minibatchOptimization.getBatches ............................................... Passed 0.01 sec

Start 36: minibatchOptimization.updateParameters

36/80 Test #36: minibatchOptimization.updateParameters ......................................... Passed 0.01 sec

Start 37: minibatchOptimizationLinearModel.testCostWithTrueParametersIsZeroIndivdually

37/80 Test #37: minibatchOptimizationLinearModel.testCostWithTrueParametersIsZeroIndivdually ... Passed 0.01 sec

Start 38: minibatchOptimizationLinearModel.testCostWithTrueParametersIsZeroFull

38/80 Test #38: minibatchOptimizationLinearModel.testCostWithTrueParametersIsZeroFull .......... Passed 0.01 sec

Start 39: minibatchOptimizationLinearModel.testMinibatchSucceedFromOptimum

39/80 Test #39: minibatchOptimizationLinearModel.testMinibatchSucceedFromOptimum ............... Passed 0.01 sec

Start 40: minibatchOptimizationLinearModel.linearModelCheckCostGradient

40/80 Test #40: minibatchOptimizationLinearModel.linearModelCheckCostGradient .................. Passed 0.01 sec

Start 41: minibatchOptimizationLinearModel.linearModelTestBatchOptimizerSucceeds

41/80 Test #41: minibatchOptimizationLinearModel.linearModelTestBatchOptimizerSucceeds ......... Passed 0.05 sec

Start 42: minibatchOptimizationLinearModel.linearModel

42/80 Test #42: minibatchOptimizationLinearModel.linearModel ................................... Passed 0.02 sec

Start 43: optimizationResultWriter.testResultWriter

43/80 Test #43: optimizationResultWriter.testResultWriter ...................................... Passed 0.02 sec

Start 44: optimizationOptions.setGetOptionStr

44/80 Test #44: optimizationOptions.setGetOptionStr ............................................ Passed 0.01 sec

Start 45: optimizationOptions.setGetOptionInt

45/80 Test #45: optimizationOptions.setGetOptionInt ............................................ Passed 0.01 sec

Start 46: optimizationOptions.setGetOptionDouble

46/80 Test #46: optimizationOptions.setGetOptionDouble ......................................... Passed 0.01 sec

Start 47: optimizationOptions.getNonExistingOption

47/80 Test #47: optimizationOptions.getNonExistingOption ....................................... Passed 0.01 sec

Start 48: optimizationOptions.setIpOptOptions

48/80 Test #48: optimizationOptions.setIpOptOptions ............................................ Passed 0.01 sec

Start 49: optimizationOptions.setCeresOptions

49/80 Test #49: optimizationOptions.setCeresOptions ............................................ Passed 0.01 sec

Start 50: optimizationOptions.fromHDF5

50/80 Test #50: optimizationOptions.fromHDF5 ................................................... Passed 0.02 sec

Start 51: optimizationProblem.quadraticTestFunction

51/80 Test #51: optimizationProblem.quadraticTestFunction ...................................... Passed 0.01 sec

Start 52: optimizationProblem.gradientChecker

52/80 Test #52: optimizationProblem.gradientChecker ............................................ Passed 0.01 sec

Start 53: optimizationProblem.linearModel

53/80 Test #53: optimizationProblem.linearModel ................................................ Passed 0.01 sec

Start 54: optimizationProblem.linearModelToGradientFun

54/80 Test #54: optimizationProblem.linearModelToGradientFun ................................... Passed 0.01 sec

Start 55: optimizationProblem.linearModelToGradientFunOptimization

55/80 Test #55: optimizationProblem.linearModelToGradientFunOptimization ....................... Passed 0.03 sec

Start 56: localOptimizationIpopt.testOptimizationResult

56/80 Test #56: localOptimizationIpopt.testOptimizationResult .................................. Passed 0.04 sec

Start 57: localOptimizationIpopt.testReporterCalled

57/80 Test #57: localOptimizationIpopt.testReporterCalled ...................................... Passed 0.02 sec

Start 58: localOptimizationCeres.testOptimization

58/80 Test #58: localOptimizationCeres.testOptimization ........................................ Passed 0.01 sec

Start 59: localOptimizationCeres.testReporterCalled

59/80 Test #59: localOptimizationCeres.testReporterCalled ...................................... Passed 0.01 sec

Start 60: simulationWorkerAmici.testSerializeResultPackageMessage

60/80 Test #60: simulationWorkerAmici.testSerializeResultPackageMessage ........................ Passed 0.01 sec

Start 61: simulationResultWriter.testResultWriter

61/80 Test #61: simulationResultWriter.testResultWriter ........................................ Passed 0.02 sec

Start 62: simulationResultWriter.testResultWriterNewExistingFile

62/80 Test #62: simulationResultWriter.testResultWriterNewExistingFile ......................... Passed 0.02 sec

Start 63: hierarchicalOptimization1.reader

63/80 Test #63: hierarchicalOptimization1.reader ............................................... Passed 0.01 sec

Start 64: hierarchicalOptimization1.spliceParameters

64/80 Test #64: hierarchicalOptimization1.spliceParameters ..................................... Passed 0.02 sec

Start 65: hierarchicalOptimization1.spliceParametersNothingToDo

65/80 Test #65: hierarchicalOptimization1.spliceParametersNothingToDo .......................... Passed 0.01 sec

Start 66: hierarchicalOptimization1.fillFilteredParams

66/80 Test #66: hierarchicalOptimization1.fillFilteredParams ................................... Passed 0.01 sec

Start 67: hierarchicalOptimization1.likelihoodOfMatchingData

67/80 Test #67: hierarchicalOptimization1.likelihoodOfMatchingData ............................. Passed 0.01 sec

Start 68: hierarchicalOptimization.hierarchicalOptimization

68/80 Test #68: hierarchicalOptimization.hierarchicalOptimization .............................. Passed 0.02 sec

Start 69: hierarchicalOptimization.testNoAnalyticalParameters

69/80 Test #69: hierarchicalOptimization.testNoAnalyticalParameters ............................ Passed 0.01 sec

Start 70: hierarchicalOptimization.testComputeAnalyticalScalings

70/80 Test #70: hierarchicalOptimization.testComputeAnalyticalScalings ......................... Passed 0.02 sec

Start 71: hierarchicalOptimization.testComputeAnalyticalOffsets

71/80 Test #71: hierarchicalOptimization.testComputeAnalyticalOffsets .......................... Passed 0.01 sec

Start 72: hierarchicalOptimization.applyOptimalScaling

72/80 Test #72: hierarchicalOptimization.applyOptimalScaling ................................... Passed 0.01 sec

Start 73: hierarchicalOptimization.applyOptimalOffset

73/80 Test #73: hierarchicalOptimization.applyOptimalOffset .................................... Passed 0.01 sec

Start 74: hierarchicalOptimization.testScaling

74/80 Test #74: hierarchicalOptimization.testScaling ........................................... Passed 0.01 sec

Start 75: hierarchicalOptimization.testWrappedFunIsCalledWithGradient

75/80 Test #75: hierarchicalOptimization.testWrappedFunIsCalledWithGradient .................... Passed 0.01 sec

Start 76: hierarchicalOptimization.problemWrapper

76/80 Test #76: hierarchicalOptimization.problemWrapper ........................................ Passed 0.01 sec

Start 77: example_loadbalancer

77/80 Test #77: example_loadbalancer ........................................................... Passed 0.35 sec

Start 78: parpe_python_package_tests

78/80 Test #78: parpe_python_package_tests ..................................................... Passed 2.78 sec

Start 79: pytest_steadystate_example

79/80 Test #79: pytest_steadystate_example ..................................................... Passed 8.22 sec

Start 80: test_steadystate

80/80 Test #80: test_steadystate ............................................................... Passed 0.02 sec

100% tests passed, 0 tests failed out of 80

Total Test time (real) = 14.70 sec

Model and data¶

This parameter estimation is specified as set of PEtab files which are generated automatically during the build process (see ${PARPE_SOURCE_ROOT}/examples/parpeamici/steadystate). They consist of an SBML model and set of tabular files describing model parameters, model inputs and measurements (training data). The model is automatically converted to C++ code and compiled and linked with parPE during the CMake-based build. The data has been converted to

a HDF5 parPE input file.

The model is loosely based on an AMICI example. It describes the dynamics of three species. The model has six outputs with respective measurements. The measurements are based on model simulations to which artificial noise has been added. Data was simulated to represent four different experimental conditions, each with different model inputs. Some outputs represent model states directly, others are offsetted or scaled. For all outputs additive normally distributed noise is assumed, the standard deviation is known for some measurements and is to be estimated for others.

Here we use libsbml to show the reactions and species described by the model:

[29]:

import libsbml

sbml_file = f'{example_data_dir}/model_steadystate_scaled.sbml'

sbml_reader = libsbml.SBMLReader()

sbml_doc = sbml_reader.readSBML(sbml_file)

sbml_model = sbml_doc.getModel()

dir(sbml_doc)

print('Species: ', [s.getId() for s in sbml_model.getListOfSpecies()])

print('\nReactions:')

for reaction in sbml_model.getListOfReactions():

reactants = ' + '.join(['%s %s'%(int(r.getStoichiometry()) if r.getStoichiometry() > 1 else '', r.getSpecies()) for r in reaction.getListOfReactants()])

products = ' + '.join(['%s %s'%(int(r.getStoichiometry()) if r.getStoichiometry() > 1 else '', r.getSpecies()) for r in reaction.getListOfProducts()])

reversible = '<' if reaction.getReversible() else ''

print('%3s: %10s %1s->%10s\t\t[%s]' % (reaction.getId(),

reactants,

reversible,

products,

libsbml.formulaToL3String(reaction.getKineticLaw().getMath())))

Species: ['x1', 'x2', 'x3']

Reactions:

r1: 2 x1 -> x2 [p1 * x1^2]

r2: x1 + x2 -> x3 [p2 * x1 * x2]

r3: x2 -> 2 x1 [p3 * x2]

r4: x3 -> x1 + x2 [p4 * x3]

r5: x3 -> [k0 * x3]

r6: -> x1 [p5]

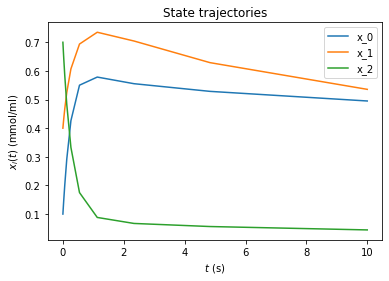

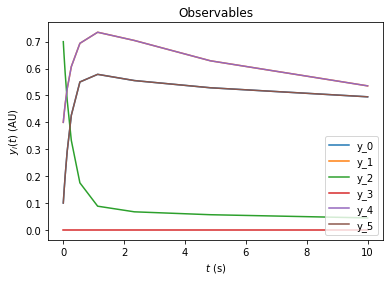

Test simulation¶

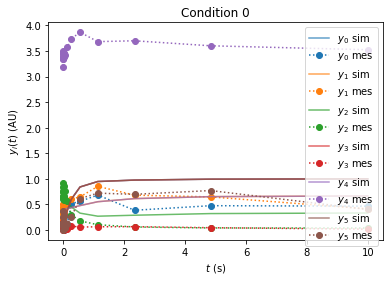

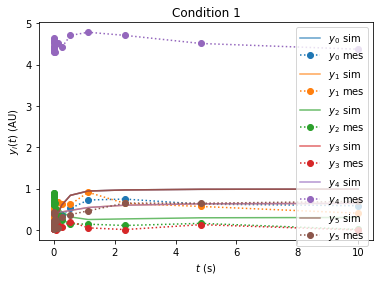

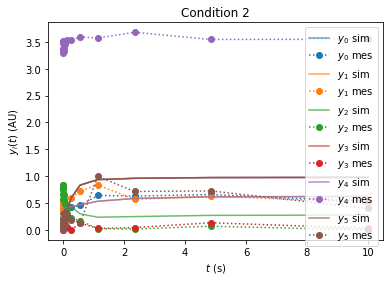

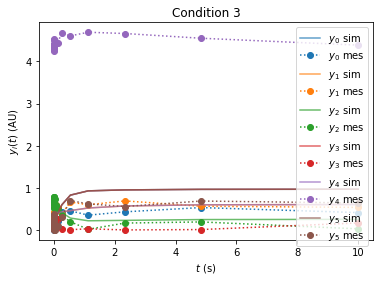

Performing an exemplary model simulation and plotting state and output trajectories:

[30]:

# load model

model_module = amici.import_model_module("model_steadystate_scaled", model_source_dir)

model = model_module.getModel()

model.setTimepoints(amici.DoubleVector(np.logspace(-5, 1, 20)))

solver = model.getSolver()

rdata = amici.runAmiciSimulation(model, solver)

default_parameters = np.array(model.getParameters())

# Default model parameters: print(default_parameters)

# Result fields reported by AMICI: list(rdata.keys())

[31]:

from amici.plotting import plotStateTrajectories

from amici.plotting import plotObservableTrajectories

plotStateTrajectories(rdata)

plotObservableTrajectories(rdata)

Standard optimization Ipopt¶

In this section, model parameters are estimated. parPE supports different optimization algorithms, available algorithms depend on the build configuration. In this section we will use Ipopt, which worked the best in our experience (https://academic.oup.com/bioinformatics/article/36/2/594/5538985).

Based on the parPE template files, a executable for parameter estimation has been built. By default, these executables are named estimate_${MODEL_NAME}. These files require a HDF5 file containing training data and optimization options. All those files have been generated automatically for this example.

This part of the example requires a parPE build with PARPE_ENABLE_IPOPT=ON.

Set parameter estimation options¶

Parameter estimation settings specified inside an HDF5 file. Those can be changed from any programming language with HDF5 bindings, with hdfview (https://www.hdfgroup.org/downloads/hdfview/), or with a helper script included in parPE, as demonstrated here:

[32]:

# enable derivative checker

input_file = f'{example_data_dir}/example_data.h5'

#!{optimization_options_py} {input_file} -s ipopt/derivative_test first-order

#!{optimization_options_py} {input_file} -s ipopt/derivative_test_print_all yes

# Use Ipopt

!{optimization_options_py} {input_file} -s optimizer 0

# Perform one optimizer runs from different starting points

!{optimization_options_py} {input_file} -s numStarts 1

# Run for 20 iterations

!{optimization_options_py} {input_file} -s ipopt/max_iter 30

# Disable hierarchical optimization (see below)

!{optimization_options_py} {input_file} -s hierarchicalOptimization 0

# Print settings

!{optimization_options_py} {input_file}

hierarchicalOptimization 0

numStarts 1

optimizer 0

retryOptimization 1

ceres/max_num_iterations 100

fmincon/GradObj b'on'

fmincon/MaxFunEvals 10000000.0

fmincon/MaxIter 100

fmincon/TolFun 0

fmincon/TolX 1e-08

fmincon/algorithm b'interior-point'

fmincon/display b'iter'

ipopt/acceptable_iter 1

ipopt/acceptable_obj_change_tol 1e-12

ipopt/acceptable_tol 1e+20

ipopt/hessian_approximation b'limited-memory'

ipopt/limited_memory_update_type b'bfgs'

ipopt/max_iter 30

ipopt/tol 1e-09

ipopt/watchdog_shortened_iter_trigger 0

toms611/mxfcal 100000000.0

Gradient check¶

Before starting the optimization, we can shortly compare the objective function gradients computed by AMICI/parPE with finite differences:

[33]:

# use three random starting points:

for i in range(3):

!PARPE_NO_DEBUG=1 {example_binary_dir}/example_steadystate_multi -t gradient_check -o deletemegc/ {input_file}

print()

[2020-06-24 17:41:30] [INF] [-1:140407870474176/] 0 g: -3.07804e+14 fd_c: -3.07834e+14 Δ/ff: 1.820688e-04 f: 1.61202e+14

[2020-06-24 17:41:30] [INF] [-1:140407870474176/] 1 g: -2.22547e+13 fd_c: -2.23559e+13 Δ/ff: 6.276508e-04 f: 1.61205e+14

[2020-06-24 17:41:30] [INF] [-1:140407870474176/] 2 g: 2.23191e+13 fd_c: 2.23727e+13 Δ/ff: -3.327962e-04 f: 1.61205e+14

[2020-06-24 17:41:30] [INF] [-1:140407870474176/] 3 g: 1.44004e+13 fd_c: 1.43986e+13 Δ/ff: 1.096761e-05 f: 1.61205e+14

[2020-06-24 17:41:30] [INF] [-1:140407870474176/] 4 g: 3.92648e+14 fd_c: 3.92711e+14 Δ/ff: -3.915439e-04 f: 1.61209e+14

[2020-06-24 17:41:30] [INF] [-1:140407870474176/] 5 g: 7.22972e+14 fd_c: 7.22972e+14 Δ/ff: -1.633158e-09 f: 1.61212e+14

[2020-06-24 17:41:30] [INF] [-1:140407870474176/] 6 g: 1.48764e+07 fd_c: 1.48766e+07 Δ/ff: -8.200509e-13 f: 1.61205e+14

[2020-06-24 17:41:30] [INF] [-1:140407870474176/] 7 g: -4.32827e+10 fd_c: -4.32827e+10 Δ/ff: -9.508153e-12 f: 1.61205e+14

[2020-06-24 17:41:30] [INF] [-1:140407870474176/] 8 g: 1.82786e+08 fd_c: 1.82784e+08 Δ/ff: 7.928618e-12 f: 1.61205e+14

[2020-06-24 17:41:30] [INF] [-1:140407870474176/] Walltime on master: 0.357358s, CPU time of all processes: 1.190509s

[2020-06-24 17:41:31] [INF] [-1:140118083160000/] 0 g: -6.21971e+09 fd_c: -6.21836e+09 Δ/ff: -7.588395e-08 f: 1.77565e+13

[2020-06-24 17:41:31] [INF] [-1:140118083160000/] 1 g: -1.01378e+10 fd_c: -1.01431e+10 Δ/ff: 3.019935e-07 f: 1.77565e+13

[2020-06-24 17:41:31] [INF] [-1:140118083160000/] 2 g: 1.01916e+10 fd_c: 1.01875e+10 Δ/ff: 2.299636e-07 f: 1.77565e+13

[2020-06-24 17:41:31] [INF] [-1:140118083160000/] 3 g: 1.01496e+10 fd_c: 1.01498e+10 Δ/ff: -1.220489e-08 f: 1.77565e+13

[2020-06-24 17:41:31] [INF] [-1:140118083160000/] 4 g: 7.63243e+08 fd_c: 7.61412e+08 Δ/ff: 1.030921e-07 f: 1.77565e+13

[2020-06-24 17:41:31] [INF] [-1:140118083160000/] 5 g: 3.5914e+10 fd_c: 3.5914e+10 Δ/ff: -1.675128e-12 f: 1.77565e+13

[2020-06-24 17:41:31] [INF] [-1:140118083160000/] 6 g: 8.66367e+07 fd_c: 8.66367e+07 Δ/ff: -1.943272e-12 f: 1.77565e+13

[2020-06-24 17:41:31] [INF] [-1:140118083160000/] 7 g: 121.481 fd_c: 195.312 Δ/ff: -4.158002e-12 f: 1.77565e+13

[2020-06-24 17:41:31] [INF] [-1:140118083160000/] 8 g: 3.66721e+08 fd_c: 3.66721e+08 Δ/ff: -2.067021e-11 f: 1.77565e+13

[2020-06-24 17:41:31] [INF] [-1:140118083160000/] Walltime on master: 0.420946s, CPU time of all processes: 1.274342s

[2020-06-24 17:41:32] [INF] [-1:140466688518080/] 0 g: 3.0421e+07 fd_c: 3.04203e+07 Δ/ff: 4.257906e-11 f: 1.62622e+13

[2020-06-24 17:41:32] [INF] [-1:140466688518080/] 1 g: -766.774 fd_c: 195.312 Δ/ff: -5.916097e-11 f: 1.62622e+13

[2020-06-24 17:41:32] [INF] [-1:140466688518080/] 2 g: -9.463e+06 fd_c: -9.46338e+06 Δ/ff: 2.347750e-11 f: 1.62622e+13

[2020-06-24 17:41:32] [INF] [-1:140466688518080/] 3 g: 4.0764e+07 fd_c: 4.07931e+07 Δ/ff: -1.786592e-09 f: 1.62622e+13

[2020-06-24 17:41:32] [INF] [-1:140466688518080/] 4 g: 5.7084e+07 fd_c: 5.7084e+07 Δ/ff: -1.569356e-12 f: 1.62622e+13

[2020-06-24 17:41:32] [INF] [-1:140466688518080/] 5 g: -1.73506 fd_c: 0 Δ/ff: -1.066930e-13 f: 1.62622e+13

[2020-06-24 17:41:32] [INF] [-1:140466688518080/] 6 g: 3.40035e+08 fd_c: 3.40035e+08 Δ/ff: -7.920917e-12 f: 1.62622e+13

[2020-06-24 17:41:32] [INF] [-1:140466688518080/] 7 g: -2.35915e+06 fd_c: -2.35918e+06 Δ/ff: 1.758269e-12 f: 1.62622e+13

[2020-06-24 17:41:32] [INF] [-1:140466688518080/] 8 g: 1.20306e+08 fd_c: 1.20307e+08 Δ/ff: -7.991474e-12 f: 1.62622e+13

[2020-06-24 17:41:32] [INF] [-1:140466688518080/] Walltime on master: 0.363544s, CPU time of all processes: 1.217862s

Optimize¶

Now we can run the actual optimization.

[34]:

# !(cd {parpe_build_root} && exec make -j12) # rebuild

!rm -rf deleteme # delete old result files

# optimize (using a single process)

!PARPE_NO_DEBUG=1 {example_binary_dir}/example_steadystate_multi -o deleteme/ {example_data_dir}/example_data.h5

[2020-06-24 17:41:33] [INF] [-1:140425994618816/] Reading random initial theta 0 from /optimizationOptions/randomStarts

List of user-set options:

Name Value used

acceptable_iter = 1 yes

acceptable_obj_change_tol = 1e-12 yes

acceptable_tol = 1e+20 yes

hessian_approximation = limited-memory yes

limited_memory_update_type = bfgs yes

max_iter = 30 yes

print_level = 5 yes

print_user_options = yes yes

tol = 1e-09 yes

watchdog_shortened_iter_trigger = 0 yes

******************************************************************************

This program contains Ipopt, a library for large-scale nonlinear optimization.

Ipopt is released as open source code under the Eclipse Public License (EPL).

For more information visit http://projects.coin-or.org/Ipopt

******************************************************************************

This is Ipopt version 3.12.12, running with linear solver ma27.

Number of nonzeros in equality constraint Jacobian...: 0

Number of nonzeros in inequality constraint Jacobian.: 0

Number of nonzeros in Lagrangian Hessian.............: 0

Total number of variables............................: 9

variables with only lower bounds: 0

variables with lower and upper bounds: 9

variables with only upper bounds: 0

Total number of equality constraints.................: 0

Total number of inequality constraints...............: 0

inequality constraints with only lower bounds: 0

inequality constraints with lower and upper bounds: 0

inequality constraints with only upper bounds: 0

[2020-06-24 17:41:33] [INF] [-1:140425699804928/] [o0i0] iter: 0 cost: 532.303 time_iter: wall: 0.137419s cpu: 0.127829s time_optim: wall: 0.137419s cpu: 0.127829s

iter objective inf_pr inf_du lg(mu) ||d|| lg(rg) alpha_du alpha_pr ls

0 5.3230269e+02 0.00e+00 1.00e+02 0.0 0.00e+00 - 0.00e+00 0.00e+00 0

[2020-06-24 17:41:33] [INF] [-1:140425699804928/] [o0i1] iter: 1 cost: 820.034 time_iter: wall: 0.090377s cpu: 0.0783326s time_optim: wall: 0.227797s cpu: 0.206162s

1 8.2003355e+02 0.00e+00 4.00e+01 2.0 5.98e+01 - 9.91e-01 2.66e-02f 2

[2020-06-24 17:41:33] [INF] [-1:140425699804928/] [o0i2] iter: 2 cost: 776.643 time_iter: wall: 0.117079s cpu: 0.106627s time_optim: wall: 0.344876s cpu: 0.312788s

2 7.7664256e+02 0.00e+00 5.20e+01 2.2 5.80e-01 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:41:33] [INF] [-1:140425699804928/] [o0i3] iter: 3 cost: -166.319 time_iter: wall: 0.0932628s cpu: 0.0802567s time_optim: wall: 0.438139s cpu: 0.393045s

3 -1.6631926e+02 0.00e+00 4.12e+01 1.5 2.34e+00 - 1.00e+00 2.50e-01f 3

[2020-06-24 17:41:33] [INF] [-1:140425699804928/] [o0i4] iter: 4 cost: -267.208 time_iter: wall: 0.0768777s cpu: 0.0693811s time_optim: wall: 0.515016s cpu: 0.462426s

4 -2.6720761e+02 0.00e+00 3.92e+00 0.2 1.85e-01 - 9.91e-01 1.00e+00f 1

[2020-06-24 17:41:33] [INF] [-1:140425699804928/] [o0i5] iter: 5 cost: -325.047 time_iter: wall: 0.0737827s cpu: 0.0664222s time_optim: wall: 0.588799s cpu: 0.528848s

5 -3.2504664e+02 0.00e+00 4.86e+00 -1.2 1.59e-01 - 9.84e-01 1.00e+00f 1

[2020-06-24 17:41:33] [INF] [-1:140425699804928/] [o0i6] iter: 6 cost: -336.398 time_iter: wall: 0.0699238s cpu: 0.0622717s time_optim: wall: 0.658723s cpu: 0.59112s

6 -3.3639830e+02 0.00e+00 1.12e+01 -1.9 8.74e-01 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:41:34] [INF] [-1:140425699804928/] [o0i7] iter: 7 cost: -389.279 time_iter: wall: 0.0860524s cpu: 0.0719065s time_optim: wall: 0.744776s cpu: 0.663026s

7 -3.8927856e+02 0.00e+00 6.91e+00 -1.8 7.08e-01 - 1.00e+00 2.50e-01f 3

[2020-06-24 17:41:34] [INF] [-1:140425699804928/] [o0i8] iter: 8 cost: -417.125 time_iter: wall: 0.0824051s cpu: 0.0701718s time_optim: wall: 0.827181s cpu: 0.733198s

8 -4.1712507e+02 0.00e+00 2.72e+00 -2.7 5.18e-01 - 1.00e+00 5.00e-01f 2

[2020-06-24 17:41:34] [INF] [-1:140425699804928/] [o0i9] iter: 9 cost: -425.124 time_iter: wall: 0.0837048s cpu: 0.0707955s time_optim: wall: 0.910886s cpu: 0.803994s

9 -4.2512435e+02 0.00e+00 2.77e+00 -4.1 1.14e-01 - 1.00e+00 5.00e-01f 2

[2020-06-24 17:41:34] [INF] [-1:140425699804928/] [o0i10] iter: 10 cost: -431.91 time_iter: wall: 0.0717744s cpu: 0.0638436s time_optim: wall: 0.982661s cpu: 0.867837s

iter objective inf_pr inf_du lg(mu) ||d|| lg(rg) alpha_du alpha_pr ls

10 -4.3191005e+02 0.00e+00 9.02e-01 -5.5 4.63e-02 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:41:34] [INF] [-1:140425699804928/] [o0i11] iter: 11 cost: -432.548 time_iter: wall: 0.0821081s cpu: 0.0695296s time_optim: wall: 1.06477s cpu: 0.937367s

11 -4.3254817e+02 0.00e+00 7.07e-01 -7.2 3.94e-02 - 1.00e+00 5.00e-01f 2

[2020-06-24 17:41:34] [INF] [-1:140425699804928/] [o0i12] iter: 12 cost: -433.253 time_iter: wall: 0.0700837s cpu: 0.0621244s time_optim: wall: 1.13485s cpu: 0.999491s

12 -4.3325317e+02 0.00e+00 5.07e-01 -8.8 3.00e-02 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:41:34] [INF] [-1:140425699804928/] [o0i13] iter: 13 cost: -434.385 time_iter: wall: 0.0719023s cpu: 0.0637952s time_optim: wall: 1.20676s cpu: 1.06329s

13 -4.3438479e+02 0.00e+00 6.25e-01 -10.3 4.40e-02 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:41:34] [INF] [-1:140425699804928/] [o0i14] iter: 14 cost: -438.759 time_iter: wall: 0.0663257s cpu: 0.0583022s time_optim: wall: 1.27308s cpu: 1.12159s

14 -4.3875867e+02 0.00e+00 3.98e+00 -11.0 5.21e-01 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:41:34] [INF] [-1:140425699804928/] [o0i15] iter: 15 cost: -440.48 time_iter: wall: 0.09416s cpu: 0.0771593s time_optim: wall: 1.36724s cpu: 1.19875s

15 -4.4047967e+02 0.00e+00 4.39e+00 -11.0 2.61e+00 - 1.00e+00 6.22e-02f 5

[2020-06-24 17:41:34] [INF] [-1:140425699804928/] [o0i16] iter: 16 cost: -442.029 time_iter: wall: 0.0896711s cpu: 0.0733529s time_optim: wall: 1.45691s cpu: 1.2721s

16 -4.4202943e+02 0.00e+00 2.94e+00 -11.0 4.38e-01 - 1.00e+00 1.25e-01f 4

[2020-06-24 17:41:34] [INF] [-1:140425699804928/] [o0i17] iter: 17 cost: -443.967 time_iter: wall: 0.0669892s cpu: 0.0584416s time_optim: wall: 1.5239s cpu: 1.33054s

17 -4.4396683e+02 0.00e+00 1.78e+00 -11.0 1.93e-01 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:41:34] [INF] [-1:140425699804928/] [o0i18] iter: 18 cost: -446.323 time_iter: wall: 0.0676223s cpu: 0.0592318s time_optim: wall: 1.59153s cpu: 1.38977s

18 -4.4632309e+02 0.00e+00 1.06e+00 -11.0 9.67e-02 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:41:34] [INF] [-1:140425699804928/] [o0i19] iter: 19 cost: -446.807 time_iter: wall: 0.0673716s cpu: 0.0595199s time_optim: wall: 1.6589s cpu: 1.44929s

19 -4.4680666e+02 0.00e+00 7.12e-01 -11.0 1.64e-01 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:41:35] [INF] [-1:140425699804928/] [o0i20] iter: 20 cost: -446.911 time_iter: wall: 0.098772s cpu: 0.0806446s time_optim: wall: 1.75767s cpu: 1.52994s

iter objective inf_pr inf_du lg(mu) ||d|| lg(rg) alpha_du alpha_pr ls

20 -4.4691082e+02 0.00e+00 5.78e-01 -11.0 5.12e-01 - 1.00e+00 3.12e-02f 6

[2020-06-24 17:41:35] [INF] [-1:140425699804928/] [o0i21] iter: 21 cost: -447.227 time_iter: wall: 0.066089s cpu: 0.0577964s time_optim: wall: 1.82376s cpu: 1.58774s

21 -4.4722745e+02 0.00e+00 4.61e-02 -11.0 6.51e-02 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:41:35] [INF] [-1:140425699804928/] [o0i22] iter: 22 cost: -447.228 time_iter: wall: 0.0922707s cpu: 0.0758644s time_optim: wall: 1.91603s cpu: 1.6636s

22 -4.4722816e+02 0.00e+00 4.07e-02 -11.0 3.33e-02 - 1.00e+00 1.25e-01f 4

[2020-06-24 17:41:35] [INF] [-1:140425699804928/] [o0i23] iter: 23 cost: -447.228 time_iter: wall: 0.0955807s cpu: 0.0783362s time_optim: wall: 2.01161s cpu: 1.74194s

23 -4.4722838e+02 0.00e+00 5.88e-02 -11.0 3.66e-02 - 1.00e+00 6.25e-02f 5

[2020-06-24 17:41:35] [INF] [-1:140425699804928/] [o0i24] iter: 24 cost: -447.23 time_iter: wall: 0.0814274s cpu: 0.0682416s time_optim: wall: 2.09304s cpu: 1.81018s

24 -4.4723019e+02 0.00e+00 2.31e-02 -11.0 3.32e-03 - 1.00e+00 5.00e-01f 2

[2020-06-24 17:41:35] [INF] [-1:140425699804928/] [o0i25] iter: 25 cost: -447.231 time_iter: wall: 0.0737931s cpu: 0.0615733s time_optim: wall: 2.16683s cpu: 1.87175s

25 -4.4723112e+02 0.00e+00 1.71e-02 -11.0 6.07e-03 - 1.00e+00 5.00e-01f 2

[2020-06-24 17:41:35] [INF] [-1:140425699804928/] [o0i26] iter: 26 cost: -447.231 time_iter: wall: 0.0784649s cpu: 0.0655171s time_optim: wall: 2.2453s cpu: 1.93727s

26 -4.4723130e+02 0.00e+00 1.31e-02 -11.0 2.84e-03 - 1.00e+00 2.50e-01f 3

[2020-06-24 17:41:35] [INF] [-1:140425699804928/] [o0i27] iter: 27 cost: -447.232 time_iter: wall: 0.0659078s cpu: 0.0578224s time_optim: wall: 2.3112s cpu: 1.99509s

27 -4.4723151e+02 0.00e+00 1.05e-02 -11.0 1.01e-03 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:41:35] [INF] [-1:140425699804928/] [o0i28] iter: 28 cost: -447.232 time_iter: wall: 0.0834543s cpu: 0.0689585s time_optim: wall: 2.39466s cpu: 2.06405s

28 -4.4723162e+02 0.00e+00 1.13e-02 -11.0 2.20e-03 - 1.00e+00 2.50e-01f 3

[2020-06-24 17:41:35] [INF] [-1:140425699804928/] [o0i29] iter: 29 cost: -447.232 time_iter: wall: 0.0683976s cpu: 0.0602698s time_optim: wall: 2.46306s cpu: 2.12432s

29 -4.4723185e+02 0.00e+00 5.13e-03 -11.0 1.09e-03 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:41:35] [INF] [-1:140425699804928/] [o0i30] iter: 30 cost: -447.232 time_iter: wall: 0.0851599s cpu: 0.0702836s time_optim: wall: 2.54822s cpu: 2.1946s

iter objective inf_pr inf_du lg(mu) ||d|| lg(rg) alpha_du alpha_pr ls

30 -4.4723198e+02 0.00e+00 1.25e-02 -11.0 1.23e-02 - 1.00e+00 2.50e-01f 3

Number of Iterations....: 30

(scaled) (unscaled)

Objective...............: -1.0027481219080936e+01 -4.4723198109367854e+02

Dual infeasibility......: 1.2481077281248806e-02 5.5666391157677142e-01

Constraint violation....: 0.0000000000000000e+00 0.0000000000000000e+00

Complementarity.........: 1.0053736651784596e-11 4.4840298993687491e-10

Overall NLP error.......: 1.2481077281248806e-02 5.5666391157677142e-01

Number of objective function evaluations = 130

Number of objective gradient evaluations = 31

Number of equality constraint evaluations = 0

Number of inequality constraint evaluations = 0

Number of equality constraint Jacobian evaluations = 0

Number of inequality constraint Jacobian evaluations = 0

Number of Lagrangian Hessian evaluations = 0

Total CPU secs in IPOPT (w/o function evaluations) = 0.556

Total CPU secs in NLP function evaluations = 2.283

EXIT: Maximum Number of Iterations Exceeded.

[2020-06-24 17:41:35] [INF] [-1:140425699804928/] [o0i31] Optimizer status 1, final llh: -4.472320e+02, time: wall: 2.548704 cpu: 2.194602.

[2020-06-24 17:41:37] [INF] [-1:140425994618816/] Walltime on master: 4.008655s, CPU time of all processes: 3.462642s

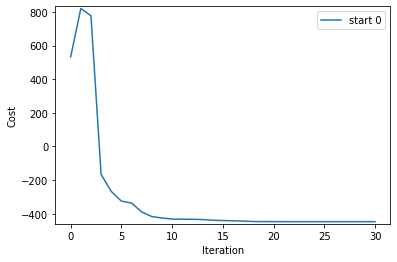

Analyze results¶

A good start for checking the results is a look at optimizer trajectories. This can be easily done using the parPE Python package:

[35]:

filename = 'deleteme/_rank00000.h5'

trajectories_ipopt = parpe.getCostTrajectories(filename)

#print(repr(trajectories))

parpe.plotting.plotCostTrajectory(trajectories_ipopt, log=False);

Since this example uses artificial data based on model simulations with known parameters, we can compare them to the optimization results:

[36]:

parpe.compare_optimization_results_to_true_parameters('deleteme/_rank00000.h5')

# __Exp____ __Act______ __Err______ __RelErr___ __ID_______

0: 1.00000 0.30485 -0.69515 -0.69515 p1

1: 0.50000 0.31305 -0.18695 -0.37391 p2

2: 0.40000 0.16030 -0.23970 -0.59925 p3

3: 2.00000 1.81174 -0.18826 -0.09413 p4

4: 0.10000 0.05128 -0.04872 -0.48718 p5

5: 2.00000 0.16231 -1.83769 -0.91885 scaling_x1_common

6: 3.00000 2.99955 -0.00045 -0.00015 offset_x2_batch_0

7: 0.20000 0.13729 -0.06271 -0.31357 x1withsigma_sigma

8: 4.00000 4.00132 0.00132 0.00033 offset_x2_batch_1

Status: 1

Cost: -447.231981 (expected: -0.000000)

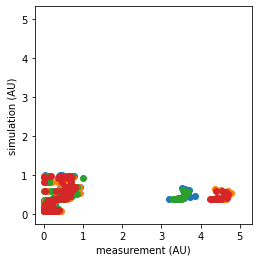

The parameters don’t match that greatly. This is not completely unexpected due to the artificial noise introduced. To get a closer look at the optimization result, we can compare the training data to the model output with the optimized parameters.

The model outputs are by default not saved during optimization, because this can lead to much I/O overhead and large files. However, there is an executable to quickly rerun simulation with the optimal parameters:

[37]:

# Simulate with optimal parameters, save results

!rm -f sim.h5 # remove files from previous run

!{example_binary_dir}/example_steadystate_multi_simulator deleteme/_rank00000.h5 /inputData deleteme/_rank00000.h5 /inputData sim.h5 / --at-optimum --nompi --nocompute-inner

Running --at-optimum for

conditions from deleteme/_rank00000.h5:/inputData and

parameters from deleteme/_rank00000.h5:/inputData

> sim.h5:/

Running for start 0

Starting simulation. Number of conditions: 4

[38]:

# Load simulated outputs

(measured, simulated, timepoints, llh) = parpe.readSimulationsFromFile('sim.h5')

start_idx = '0'

[39]:

# Plot correlation of measurements (training data) and model simulation with optimized parameters

parpe.plotting.plotCorrelation(measured[start_idx], simulated[start_idx])

[39]:

<matplotlib.axes._subplots.AxesSubplot at 0x7fc10fbead60>

[40]:

# Plot measurement trajectories (training data) and compare to model simulation with optimized parameters

parpe.plotting.plotTrajectoryFits(measured[start_idx], simulated[start_idx], timepoints[start_idx])

Changing optimizers - Ceres¶

Once parPE has been built with support for different optimizers, selecting a different optimizer is quite easy.

(This part of the example requires a parPE build with PARPE_ENABLE_CERES=ON)

[41]:

# copy input file from above example

example_data_ceres = f'{example_data_dir}/example_data_ceres.h5'

!cp {example_data_dir}/example_data.h5 {example_data_ceres}

# Change optimizer to CERES

# NOTE: For this moment, optimizers are inconveniently selected based on numeric values, 0 is Ipopt, 1 is Ceres.

!{optimization_options_py} {example_data_ceres} -s optimizer 1

# Run optimization

!PARPE_NO_DEBUG=1 {mpiexec} {example_binary_dir}/example_steadystate_multi -o deletemeceres/ {example_data_ceres} --mpi

[2020-06-24 17:41:41] [INF] [0:139880673687488/dweindl-ThinkPad-L480] Running with 4 MPI processes.

[2020-06-24 17:41:41] [INF] [0:139880673687488/dweindl-ThinkPad-L480] Reading random initial theta 0 from /optimizationOptions/randomStarts

callbacks.cc:105 0: f: 5.323027e+02 d: 0.00e+00 g: 4.46e+03 h: 0.00e+00 s: 0.00e+00 e: 0 it: 4.13e-02 tt: 4.13e-02

[2020-06-24 17:41:41] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i0] iter: 0 cost: 532.303 time_iter: wall: 0.0415566s cpu: 0.0809107s time_optim: wall: 0.0415569s cpu: 0.0809107s

callbacks.cc:105 1: f:-3.344605e+02 d: 8.67e+02 g: 1.67e+02 h: 1.13e+00 s: 2.24e-04 e: 1 it: 3.72e-02 tt: 7.95e-02

[2020-06-24 17:41:41] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i1] iter: 1 cost: -334.461 time_iter: wall: 0.0382083s cpu: 0.0764133s time_optim: wall: 0.0797655s cpu: 0.157324s

callbacks.cc:105 2: f:-3.788412e+02 d: 4.44e+01 g: 3.85e+02 h: 3.29e-01 s: 1.28e-03 e: 5 it: 1.56e-01 tt: 2.36e-01

[2020-06-24 17:41:41] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i2] iter: 2 cost: -378.841 time_iter: wall: 0.156987s cpu: 0.293226s time_optim: wall: 0.236753s cpu: 0.45055s

callbacks.cc:105 3: f:-4.183630e+02 d: 3.95e+01 g: 2.30e+02 h: 3.95e-01 s: 4.06e-04 e: 1 it: 3.77e-02 tt: 2.75e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i3] iter: 3 cost: -418.363 time_iter: wall: 0.0381825s cpu: 0.0691572s time_optim: wall: 0.274936s cpu: 0.519708s

callbacks.cc:105 4: f:-4.189047e+02 d: 5.42e-01 g: 2.85e+02 h: 4.76e-02 s: 5.58e-04 e: 2 it: 8.96e-02 tt: 3.65e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i4] iter: 4 cost: -418.905 time_iter: wall: 0.0901106s cpu: 0.161033s time_optim: wall: 0.365047s cpu: 0.68074s

callbacks.cc:105 5: f:-4.197841e+02 d: 8.79e-01 g: 2.78e+02 h: 2.46e-02 s: 3.88e-04 e: 1 it: 3.98e-02 tt: 4.05e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i5] iter: 5 cost: -419.784 time_iter: wall: 0.0402574s cpu: 0.0745627s time_optim: wall: 0.405304s cpu: 0.755303s

callbacks.cc:105 6: f:-4.207404e+02 d: 9.56e-01 g: 2.96e+02 h: 6.32e-02 s: 1.52e-03 e: 1 it: 3.93e-02 tt: 4.45e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i6] iter: 6 cost: -420.74 time_iter: wall: 0.0398202s cpu: 0.0781893s time_optim: wall: 0.445125s cpu: 0.833492s

callbacks.cc:105 7: f:-4.223504e+02 d: 1.61e+00 g: 2.93e+02 h: 8.02e-02 s: 1.09e-03 e: 1 it: 3.71e-02 tt: 4.82e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i7] iter: 7 cost: -422.35 time_iter: wall: 0.0375706s cpu: 0.0742174s time_optim: wall: 0.482696s cpu: 0.907709s

callbacks.cc:105 8: f:-4.252214e+02 d: 2.87e+00 g: 2.84e+02 h: 1.55e-01 s: 2.42e-03 e: 1 it: 3.77e-02 tt: 5.21e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i8] iter: 8 cost: -425.221 time_iter: wall: 0.0381947s cpu: 0.0754364s time_optim: wall: 0.520891s cpu: 0.983146s

callbacks.cc:105 9: f:-4.291242e+02 d: 3.90e+00 g: 2.72e+02 h: 4.47e-01 s: 5.38e-02 e: 1 it: 3.75e-02 tt: 5.59e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i9] iter: 9 cost: -429.124 time_iter: wall: 0.0379482s cpu: 0.0749299s time_optim: wall: 0.558839s cpu: 1.05808s

callbacks.cc:105 10: f:-4.358720e+02 d: 6.75e+00 g: 2.09e+02 h: 5.84e-02 s: 1.20e-01 e: 1 it: 3.65e-02 tt: 5.96e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i10] iter: 10 cost: -435.872 time_iter: wall: 0.036973s cpu: 0.0729143s time_optim: wall: 0.595813s cpu: 1.13099s

callbacks.cc:105 11: f:-4.441651e+02 d: 8.29e+00 g: 3.97e+01 h: 2.26e-01 s: 7.51e-01 e: 1 it: 3.93e-02 tt: 6.35e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i11] iter: 11 cost: -444.165 time_iter: wall: 0.0397685s cpu: 0.0773617s time_optim: wall: 0.635581s cpu: 1.20835s

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i12] iter: 12 cost: -445.062 time_iter: wall: 0.0374097s cpu: 0.0724785s time_optim: wall: 0.672991s cpu: 1.28083s

callbacks.cc:105 12: f:-4.450617e+02 d: 8.97e-01 g: 1.17e+01 h: 6.36e-02 s: 1.00e+00 e: 1 it: 3.65e-02 tt: 6.73e-01

callbacks.cc:105 13: f:-4.451359e+02 d: 7.42e-02 g: 1.17e+01 h: 5.40e-02 s: 1.00e+00 e: 1 it: 4.10e-02 tt: 7.14e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i13] iter: 13 cost: -445.136 time_iter: wall: 0.0415508s cpu: 0.0775626s time_optim: wall: 0.714542s cpu: 1.35839s

callbacks.cc:105 14: f:-4.452398e+02 d: 1.04e-01 g: 1.16e+01 h: 5.48e-02 s: 1.00e+00 e: 1 it: 3.74e-02 tt: 7.52e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i14] iter: 14 cost: -445.24 time_iter: wall: 0.0379656s cpu: 0.0741554s time_optim: wall: 0.752508s cpu: 1.43255s

callbacks.cc:105 15: f:-4.454254e+02 d: 1.86e-01 g: 1.15e+01 h: 8.01e-02 s: 6.39e-01 e: 1 it: 4.32e-02 tt: 7.96e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i15] iter: 15 cost: -445.425 time_iter: wall: 0.0437119s cpu: 0.0795556s time_optim: wall: 0.79622s cpu: 1.5121s

callbacks.cc:105 16: f:-4.457760e+02 d: 3.51e-01 g: 1.08e+01 h: 1.41e-01 s: 2.84e-01 e: 1 it: 3.65e-02 tt: 8.33e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i16] iter: 16 cost: -445.776 time_iter: wall: 0.036956s cpu: 0.0727817s time_optim: wall: 0.833176s cpu: 1.58489s

callbacks.cc:105 17: f:-4.460990e+02 d: 3.23e-01 g: 1.73e+01 h: 2.69e-01 s: 1.62e-01 e: 1 it: 3.91e-02 tt: 8.72e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i17] iter: 17 cost: -446.099 time_iter: wall: 0.0395317s cpu: 0.0750284s time_optim: wall: 0.872708s cpu: 1.65991s

callbacks.cc:105 18: f:-4.465246e+02 d: 4.26e-01 g: 9.07e+00 h: 6.18e-02 s: 8.06e-01 e: 1 it: 3.75e-02 tt: 9.10e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i18] iter: 18 cost: -446.525 time_iter: wall: 0.0380117s cpu: 0.0768193s time_optim: wall: 0.91072s cpu: 1.73673s

callbacks.cc:105 19: f:-4.469159e+02 d: 3.91e-01 g: 8.47e+00 h: 1.57e-01 s: 1.00e+00 e: 1 it: 3.74e-02 tt: 9.48e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i19] iter: 19 cost: -446.916 time_iter: wall: 0.0378456s cpu: 0.0734315s time_optim: wall: 0.948566s cpu: 1.81016s

callbacks.cc:105 20: f:-4.470190e+02 d: 1.03e-01 g: 7.25e+00 h: 3.44e-02 s: 1.00e+00 e: 1 it: 3.77e-02 tt: 9.86e-01

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i20] iter: 20 cost: -447.019 time_iter: wall: 0.0381489s cpu: 0.0755738s time_optim: wall: 0.986715s cpu: 1.88574s

callbacks.cc:105 21: f:-4.470725e+02 d: 5.35e-02 g: 3.81e+00 h: 4.45e-02 s: 1.00e+00 e: 1 it: 3.64e-02 tt: 1.02e+00

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i21] iter: 21 cost: -447.072 time_iter: wall: 0.0368396s cpu: 0.0732056s time_optim: wall: 1.02356s cpu: 1.95894s

callbacks.cc:105 22: f:-4.471107e+02 d: 3.82e-02 g: 5.08e+00 h: 7.65e-02 s: 4.64e-02 e: 1 it: 3.71e-02 tt: 1.06e+00

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i22] iter: 22 cost: -447.111 time_iter: wall: 0.0375187s cpu: 0.0742591s time_optim: wall: 1.06107s cpu: 2.0332s

callbacks.cc:105 23: f:-4.471110e+02 d: 3.08e-04 g: 4.69e+00 h: 6.83e-04 s: 1.81e-03 e: 2 it: 7.50e-02 tt: 1.14e+00

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i23] iter: 23 cost: -447.111 time_iter: wall: 0.0754316s cpu: 0.152713s time_optim: wall: 1.13651s cpu: 2.18592s

callbacks.cc:105 24: f:-4.471154e+02 d: 4.41e-03 g: 6.50e+00 h: 5.26e-03 s: 9.69e-03 e: 2 it: 7.74e-02 tt: 1.21e+00

[2020-06-24 17:41:42] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i24] iter: 24 cost: -447.115 time_iter: wall: 0.0778265s cpu: 0.153936s time_optim: wall: 1.21433s cpu: 2.33985s

callbacks.cc:105 25: f:-4.471214e+02 d: 5.97e-03 g: 6.20e+00 h: 8.19e-03 s: 5.28e-04 e: 1 it: 3.64e-02 tt: 1.25e+00

[2020-06-24 17:41:43] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i25] iter: 25 cost: -447.121 time_iter: wall: 0.0368165s cpu: 0.0730412s time_optim: wall: 1.25115s cpu: 2.41289s

callbacks.cc:105 26: f:-4.471818e+02 d: 6.04e-02 g: 6.25e+00 h: 9.16e-02 s: 4.15e-03 e: 2 it: 7.55e-02 tt: 1.33e+00

[2020-06-24 17:41:43] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i26] iter: 26 cost: -447.182 time_iter: wall: 0.0760229s cpu: 0.14886s time_optim: wall: 1.32717s cpu: 2.56175s

callbacks.cc:105 27: f:-4.471928e+02 d: 1.10e-02 g: 6.28e+00 h: 1.63e-02 s: 1.47e-03 e: 2 it: 7.39e-02 tt: 1.40e+00

[2020-06-24 17:41:43] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i27] iter: 27 cost: -447.193 time_iter: wall: 0.0743093s cpu: 0.149165s time_optim: wall: 1.40148s cpu: 2.71092s

callbacks.cc:105 28: f:-4.471946e+02 d: 1.84e-03 g: 5.48e+00 h: 7.66e-03 s: 2.21e-02 e: 2 it: 7.66e-02 tt: 1.48e+00

[2020-06-24 17:41:43] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i28] iter: 28 cost: -447.195 time_iter: wall: 0.076984s cpu: 0.15148s time_optim: wall: 1.47847s cpu: 2.8624s

callbacks.cc:105 29: f:-4.472208e+02 d: 2.61e-02 g: 2.36e+00 h: 1.22e-02 s: 2.82e-01 e: 2 it: 7.30e-02 tt: 1.55e+00

[2020-06-24 17:41:43] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i29] iter: 29 cost: -447.221 time_iter: wall: 0.0734252s cpu: 0.146759s time_optim: wall: 1.55189s cpu: 3.00916s

callbacks.cc:105 30: f:-4.472269e+02 d: 6.11e-03 g: 2.56e+00 h: 3.82e-02 s: 1.00e+00 e: 1 it: 3.69e-02 tt: 1.59e+00

[2020-06-24 17:41:43] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i30] iter: 30 cost: -447.227 time_iter: wall: 0.037355s cpu: 0.0741047s time_optim: wall: 1.58925s cpu: 3.08326s

callbacks.cc:105 31: f:-4.472342e+02 d: 7.31e-03 g: 1.12e+00 h: 1.67e-02 s: 7.05e-01 e: 1 it: 3.68e-02 tt: 1.63e+00

[2020-06-24 17:41:43] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i31] iter: 31 cost: -447.234 time_iter: wall: 0.0372581s cpu: 0.0736081s time_optim: wall: 1.62651s cpu: 3.15687s

callbacks.cc:105 32: f:-4.472355e+02 d: 1.29e-03 g: 1.60e-01 h: 6.13e-03 s: 1.00e+00 e: 1 it: 3.84e-02 tt: 1.67e+00

[2020-06-24 17:41:43] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i32] iter: 32 cost: -447.235 time_iter: wall: 0.0388177s cpu: 0.0753357s time_optim: wall: 1.66532s cpu: 3.23221s

callbacks.cc:105 33: f:-4.472360e+02 d: 4.63e-04 g: 1.61e-01 h: 1.32e-02 s: 1.00e+00 e: 1 it: 3.70e-02 tt: 1.70e+00

[2020-06-24 17:41:43] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i33] iter: 33 cost: -447.236 time_iter: wall: 0.0374512s cpu: 0.0755949s time_optim: wall: 1.70277s cpu: 3.3078s

callbacks.cc:105 34: f:-4.472361e+02 d: 1.07e-04 g: 1.54e-02 h: 6.50e-03 s: 1.00e+00 e: 1 it: 3.63e-02 tt: 1.74e+00

[2020-06-24 17:41:43] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i34] iter: 34 cost: -447.236 time_iter: wall: 0.036703s cpu: 0.0725629s time_optim: wall: 1.73948s cpu: 3.38036s

callbacks.cc:105 35: f:-4.472361e+02 d: 1.71e-06 g: 4.67e-03 h: 8.97e-04 s: 1.00e+00 e: 1 it: 3.81e-02 tt: 1.78e+00

[2020-06-24 17:41:43] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i35] iter: 35 cost: -447.236 time_iter: wall: 0.0385312s cpu: 0.076953s time_optim: wall: 1.77801s cpu: 3.45732s

line_search.cc:758 Line search failed: Wolfe zoom bracket width: 4.68275e-06 too small with descent_direction_max_norm: 8.19905e-05.

line_search_minimizer.cc:413 Terminating: Parameter tolerance reached. Relative step_norm: 0.000000e+00 <= 1.000000e-08.

[2020-06-24 17:41:43] [INF] [0:139880252290816/dweindl-ThinkPad-L480] [o0i36] Optimizer status 0, final llh: -4.472361e+02, time: wall: 1.970559 cpu: 3.852414.

[2020-06-24 17:41:44] [INF] [0:139880673687488/dweindl-ThinkPad-L480] Walltime on master: 3.286791s, CPU time of all processes: 13.255544s

[2020-06-24 17:41:44] [INF] [0:139880673687488/dweindl-ThinkPad-L480] Sent termination signal to workers.

[42]:

ceres_optimization_result_filename = 'deletemeceres/_rank00000.h5'

trajectories_ceres = parpe.getCostTrajectories(ceres_optimization_result_filename)

#print(repr(trajectories))

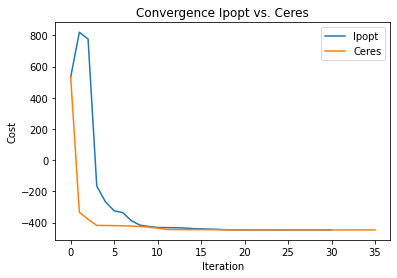

ax = parpe.plotting.plotCostTrajectory(trajectories_ipopt, log=False);

parpe.plotting.plotCostTrajectory(trajectories_ceres, log=False, ax=ax);

ax.autoscale(True)

ax.legend(['Ipopt', 'Ceres'])

ax.set_title('Convergence Ipopt vs. Ceres');

[43]:

parpe.compare_optimization_results_to_true_parameters(ceres_optimization_result_filename)

# __Exp____ __Act______ __Err______ __RelErr___ __ID_______

0: 1.00000 0.30548 -0.69452 -0.69452 p1

1: 0.50000 0.30052 -0.19948 -0.39895 p2

2: 0.40000 0.16337 -0.23663 -0.59158 p3

3: 2.00000 1.81043 -0.18957 -0.09478 p4

4: 0.10000 0.04597 -0.05403 -0.54034 p5

5: 2.00000 0.16233 -1.83767 -0.91883 scaling_x1_common

6: 3.00000 2.99965 -0.00035 -0.00012 offset_x2_batch_0

7: 0.20000 0.13703 -0.06297 -0.31484 x1withsigma_sigma

8: 4.00000 4.00120 0.00120 0.00030 offset_x2_batch_1

Status: 0

Cost: -447.236062 (expected: -0.000000)

[43]: