parPE example: steadystate model - hierarchical optimization¶

This notebook demonstrates the use of parPE for parameter estimation using a recently developed hierarchical optimization approach (https://doi.org/10.1093/bioinformatics/btz581).

The model and data used in this notebook are described in more detail in parpeExampleSteadystateBasic.ipynb.

Prerequisites¶

The prerequisites mentioned in parpeExampleSteadystateBasic.ipynb also apply to this notebook.

[1]:

import amici

import os

import sys

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import h5py

from importlib import reload

import parpe

# set paths

parpe_source_root = os.path.abspath('../../../')

parpe_build_root = os.path.join(parpe_source_root, 'build')

model_source_dir = f'{parpe_build_root}/examples/parpeamici/steadystate/steadystate_scaled-prefix/src/steadystate_scaled/model_steadystate_scaled'

example_binary_dir = f'{parpe_build_root}/examples/parpeamici/steadystate/'

example_data_dir = f'{parpe_build_root}/examples/parpeamici/steadystate/steadystate_scaled-prefix/src/steadystate_scaled'

optimization_options_py = f'{parpe_source_root}/misc/optimizationOptions.py'

# MPI launcher and options

mpiexec = "mpiexec -n 4 --allow-run-as-root --oversubscribe"

[2]:

# rebuild example

!cd {parpe_build_root} && make

-- Found Git: /usr/bin/git (found version "2.25.1")

-- Building version parPE-v0.4.3-41-g696ed

[ 0%] Built target get_version

[ 10%] Built target parpecommon

Scanning dependencies of target parpeoptimization

[ 11%] Building CXX object src/parpeoptimization/CMakeFiles/parpeoptimization.dir/optimizationResultWriter.cpp.o

[ 12%] Linking CXX static library libparpeoptimization-dbg.a

[ 21%] Built target parpeoptimization

[ 25%] Built target parpeloadbalancer

Scanning dependencies of target parpeamici

[ 26%] Building CXX object src/parpeamici/CMakeFiles/parpeamici.dir/optimizationApplication.cpp.o

[ 27%] Linking CXX static library libparpeamici-dbg.a

[ 37%] Built target parpeamici

[ 39%] Built target parpe

[ 45%] Built target unittests_common

[ 50%] Built target unittests_loadbalancer

[ 51%] Linking CXX executable unittests_optimization

[ 58%] Built target unittests_optimization

Setting up virtual environment...

[ 58%] Built target setup_venv

[ 59%] Creating test data using hierarchicalOptimizationTest.py

...

----------------------------------------------------------------------

Ran 3 tests in 0.000s

OK

[ 59%] Built target prepare_test_hierarchical_optimization

[ 60%] Linking CXX executable unittests_amici

[ 67%] Built target unittests_amici

[ 69%] Built target example_loadbalancer

[ 70%] Performing build step for 'steadystate_scaled'

[ 90%] Built target model_steadystate_scaled

[ 94%] Built target simulate_model_steadystate_scaled

[ 96%] Built target model_steadystate_scaled_swig_compilation

[100%] Built target _model_steadystate_scaled

[ 72%] No install step for 'steadystate_scaled'

[ 73%] No test step for 'steadystate_scaled'

[ 74%] Completed 'steadystate_scaled'

[ 81%] Built target steadystate_scaled

[ 82%] Linking CXX executable example_steadystate

[ 84%] Built target example_steadystate

[ 86%] Linking CXX executable example_steadystate_multi

[ 88%] Built target example_steadystate_multi

[ 89%] Linking CXX executable example_steadystate_multi_simulator

[ 92%] Built target example_steadystate_multi_simulator

[ 93%] Linking CXX executable test_steadystate

[100%] Built target test_steadystate

[3]:

# run make test to generated all output files required below

#!cd {parpe_build_root} && make test

Hierarchical optimization¶

Depending on the type of training data, the parameter estimation may contain a large number of output or nuisance parameters necessary to model the measurement process and transform model states to the actually measured quantity. These parameters are required for model parameterization, but don’t affect the model states. This could for example be scaling parameters, in the case of relative measurements.

We recently developed an hierarchical optimization approach, that allows to analytically compute scaling, offset and sigma parameters. For large models, this can greatly improve optimizer convergence, and therefore, computation time (https://doi.org/10.1093/bioinformatics/btz581).

In this hierarchical approach, the optimization problems is split into dynamic parameters, influencing the state of the system, and static or output parameters, which do not influence the state of system, but only its outputs. The numeric optimization algorithm is used to optimize the dynamic parameters only. Given these dynamic parameters, we can determine locally optimal values for some output parameters (in this example: all) analytically during each iteration. More detailed descriptions and the theoretical basis are provided in https://doi.org/10.1093/bioinformatics/bty514, https://doi.org/10.1093/bioinformatics/btz581, and the respective supplementary information.

Optimize¶

[4]:

hdf5_input_file_hierarchical = f'{example_data_dir}/example_data.h5'

hdf5_pe_output_file_hierarchical = 'deletemehierarchical/_rank00000.h5'

# Enabling hierarchical optimization in parPE is as easy as this:

!{optimization_options_py} {hdf5_input_file_hierarchical} -s hierarchicalOptimization 1

# Set some additional options, independently of hierarchical optimization

!{optimization_options_py} {hdf5_input_file_hierarchical} -s numStarts 1

!{optimization_options_py} {hdf5_input_file_hierarchical} -s ipopt/max_iter 20

!{optimization_options_py} {hdf5_input_file_hierarchical} -s ipopt/acceptable_obj_change_tol 1e-5

!{optimization_options_py} {hdf5_input_file_hierarchical} -s ipopt/acceptable_tol 1e-5

!{optimization_options_py} {hdf5_input_file_hierarchical} -s retryOptimization 0

# Print current settings

!{optimization_options_py} {hdf5_input_file_hierarchical}

hierarchicalOptimization 1

numStarts 1

optimizer 0

retryOptimization 0

ceres/max_num_iterations 100

fmincon/GradObj b'on'

fmincon/MaxFunEvals 10000000.0

fmincon/MaxIter 100

fmincon/TolFun 0

fmincon/TolX 1e-08

fmincon/algorithm b'interior-point'

fmincon/display b'iter'

ipopt/acceptable_iter 1

ipopt/acceptable_obj_change_tol 1e-05

ipopt/acceptable_tol 1e-05

ipopt/hessian_approximation b'limited-memory'

ipopt/limited_memory_update_type b'bfgs'

ipopt/max_iter 20

ipopt/tol 1e-09

ipopt/watchdog_shortened_iter_trigger 0

toms611/mxfcal 100000000.0

[5]:

# Before starting the optimization, we can shortly compare the objective function gradients computed by AMICI/parPE with finite differences:

!PARPE_NO_DEBUG=1 {example_binary_dir}/example_steadystate_multi -t gradient_check -o deletemegc/ {hdf5_input_file_hierarchical}

[2020-06-24 17:51:09] [INF] [-1:140661450438592/] 0 g: 3.70264e+06 fd_c: 3.71926e+06 Δ/ff: -1.316027e-05 f: 1.26298e+09

[2020-06-24 17:51:09] [INF] [-1:140661450438592/] 1 g: -6.39635e+06 fd_c: -6.37737e+06 Δ/ff: -1.502652e-05 f: 1.26298e+09

[2020-06-24 17:51:09] [INF] [-1:140661450438592/] 2 g: -646595 fd_c: -674557 Δ/ff: 2.213978e-05 f: 1.26298e+09

[2020-06-24 17:51:09] [INF] [-1:140661450438592/] 3 g: 5.96119e+06 fd_c: 5.96607e+06 Δ/ff: -3.868144e-06 f: 1.26298e+09

[2020-06-24 17:51:09] [INF] [-1:140661450438592/] 4 g: 5.80955e+09 fd_c: 5.80954e+09 Δ/ff: 1.416672e-05 f: 1.26303e+09

[2020-06-24 17:51:09] [INF] [-1:140661450438592/] Walltime on master: 0.245290s, CPU time of all processes: 1.148713s

[6]:

# Run the actual optimization (using MPI with 4 processes, 1 master, 3 workers)

!PARPE_NO_DEBUG=1 {mpiexec} {example_binary_dir}/example_steadystate_multi -o deletemehierarchical/ {hdf5_input_file_hierarchical} --mpi

[2020-06-24 17:51:10] [INF] [0:139634931677120/dweindl-ThinkPad-L480] Running with 4 MPI processes.

[2020-06-24 17:51:10] [INF] [0:139634931677120/dweindl-ThinkPad-L480] Reading random initial theta 0 from /optimizationOptions/randomStarts

List of user-set options:

Name Value used

acceptable_iter = 1 yes

acceptable_obj_change_tol = 1e-05 yes

acceptable_tol = 1e-05 yes

hessian_approximation = limited-memory yes

limited_memory_update_type = bfgs yes

max_iter = 20 yes

print_level = 5 yes

print_user_options = yes yes

tol = 1e-09 yes

watchdog_shortened_iter_trigger = 0 yes

******************************************************************************

This program contains Ipopt, a library for large-scale nonlinear optimization.

Ipopt is released as open source code under the Eclipse Public License (EPL).

For more information visit http://projects.coin-or.org/Ipopt

******************************************************************************

This is Ipopt version 3.12.12, running with linear solver ma27.

Number of nonzeros in equality constraint Jacobian...: 0

Number of nonzeros in inequality constraint Jacobian.: 0

Number of nonzeros in Lagrangian Hessian.............: 0

Total number of variables............................: 5

variables with only lower bounds: 0

variables with lower and upper bounds: 5

variables with only upper bounds: 0

Total number of equality constraints.................: 0

Total number of inequality constraints...............: 0

inequality constraints with only lower bounds: 0

inequality constraints with lower and upper bounds: 0

inequality constraints with only upper bounds: 0

[2020-06-24 17:51:10] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i0] iter: 0 cost: -439.904 time_iter: wall: 0.0541594s cpu: 0.153553s time_optim: wall: 0.0541598s cpu: 0.153553s

iter objective inf_pr inf_du lg(mu) ||d|| lg(rg) alpha_du alpha_pr ls

0 -4.3990444e+02 0.00e+00 4.56e+01 0.0 0.00e+00 - 0.00e+00 0.00e+00 0

[2020-06-24 17:51:10] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i1] iter: 1 cost: -440.11 time_iter: wall: 0.131574s cpu: 0.201234s time_optim: wall: 0.185734s cpu: 0.354787s

1 -4.4011049e+02 0.00e+00 4.40e+01 1.4 2.88e+01 - 4.36e-01 1.78e-03f 7

[2020-06-24 17:51:10] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i2] iter: 2 cost: -441.25 time_iter: wall: 0.0601212s cpu: 0.144102s time_optim: wall: 0.245855s cpu: 0.498889s

2 -4.4125031e+02 0.00e+00 2.11e+01 -0.6 1.71e-02 - 9.97e-01 1.00e+00f 1

[2020-06-24 17:51:10] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i3] iter: 3 cost: -441.793 time_iter: wall: 0.0587328s cpu: 0.140642s time_optim: wall: 0.304588s cpu: 0.639531s

3 -4.4179296e+02 0.00e+00 1.46e+01 -2.2 2.25e-02 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:51:10] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i4] iter: 4 cost: -442.644 time_iter: wall: 0.0593916s cpu: 0.140892s time_optim: wall: 0.36398s cpu: 0.780423s

4 -4.4264439e+02 0.00e+00 1.06e+01 -3.9 4.32e-02 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:51:10] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i5] iter: 5 cost: -443.123 time_iter: wall: 0.0812911s cpu: 0.150629s time_optim: wall: 0.445271s cpu: 0.931052s

5 -4.4312253e+02 0.00e+00 3.58e+01 -5.4 1.63e-01 - 1.00e+00 5.00e-01f 2

[2020-06-24 17:51:10] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i6] iter: 6 cost: -444.538 time_iter: wall: 0.0535397s cpu: 0.116163s time_optim: wall: 0.498811s cpu: 1.04721s

6 -4.4453768e+02 0.00e+00 5.15e+01 -6.6 4.71e-01 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:51:10] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i7] iter: 7 cost: -446.577 time_iter: wall: 0.0549543s cpu: 0.122927s time_optim: wall: 0.553766s cpu: 1.17014s

7 -4.4657694e+02 0.00e+00 3.04e+01 -7.2 1.12e-01 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:51:11] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i8] iter: 8 cost: -447.196 time_iter: wall: 0.0552537s cpu: 0.119712s time_optim: wall: 0.60902s cpu: 1.28985s

8 -4.4719614e+02 0.00e+00 2.47e+00 -8.8 3.40e-02 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:51:11] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i9] iter: 9 cost: -447.227 time_iter: wall: 0.0555729s cpu: 0.120651s time_optim: wall: 0.664593s cpu: 1.4105s

9 -4.4722728e+02 0.00e+00 1.31e+00 -10.5 2.98e-02 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:51:11] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i10] iter: 10 cost: -447.229 time_iter: wall: 0.0754439s cpu: 0.140183s time_optim: wall: 0.740037s cpu: 1.55069s

iter objective inf_pr inf_du lg(mu) ||d|| lg(rg) alpha_du alpha_pr ls

10 -4.4722879e+02 0.00e+00 1.45e+00 -11.0 2.70e-02 - 1.00e+00 2.50e-01f 3

[2020-06-24 17:51:11] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i11] iter: 11 cost: -447.232 time_iter: wall: 0.0666346s cpu: 0.12471s time_optim: wall: 0.806672s cpu: 1.6754s

11 -4.4723183e+02 0.00e+00 6.10e-01 -11.0 1.32e-02 - 1.00e+00 5.00e-01f 2

[2020-06-24 17:51:11] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i12] iter: 12 cost: -447.232 time_iter: wall: 0.0628816s cpu: 0.122932s time_optim: wall: 0.869554s cpu: 1.79833s

12 -4.4723222e+02 0.00e+00 1.03e+00 -11.0 1.69e-02 - 1.00e+00 5.00e-01f 2

[2020-06-24 17:51:11] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i13] iter: 13 cost: -447.235 time_iter: wall: 0.051293s cpu: 0.112279s time_optim: wall: 0.920847s cpu: 1.91061s

13 -4.4723457e+02 0.00e+00 5.17e-01 -11.0 1.56e-02 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:51:11] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i14] iter: 14 cost: -447.235 time_iter: wall: 0.0832425s cpu: 0.141398s time_optim: wall: 1.00409s cpu: 2.05201s

14 -4.4723480e+02 0.00e+00 1.11e-01 -11.0 2.44e-02 - 1.00e+00 1.25e-01f 4

[2020-06-24 17:51:11] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i15] iter: 15 cost: -447.236 time_iter: wall: 0.0628442s cpu: 0.121933s time_optim: wall: 1.06693s cpu: 2.17394s

15 -4.4723572e+02 0.00e+00 4.75e-01 -11.0 6.24e-02 - 1.00e+00 5.00e-01f 2

[2020-06-24 17:51:11] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i16] iter: 16 cost: -447.236 time_iter: wall: 0.0731104s cpu: 0.142942s time_optim: wall: 1.14004s cpu: 2.31688s

16 -4.4723594e+02 0.00e+00 3.20e-01 -11.0 2.53e-03 - 1.00e+00 5.00e-01f 2

[2020-06-24 17:51:11] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i17] iter: 17 cost: -447.236 time_iter: wall: 0.0522144s cpu: 0.118318s time_optim: wall: 1.19226s cpu: 2.4352s

17 -4.4723597e+02 0.00e+00 2.59e-01 -11.0 7.42e-03 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:51:11] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i18] iter: 18 cost: -447.236 time_iter: wall: 0.0943844s cpu: 0.150501s time_optim: wall: 1.28664s cpu: 2.5857s

18 -4.4723601e+02 0.00e+00 1.58e-01 -11.0 6.68e-02 - 1.00e+00 3.12e-02f 6

[2020-06-24 17:51:11] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i19] iter: 19 cost: -447.236 time_iter: wall: 0.0741556s cpu: 0.137393s time_optim: wall: 1.3608s cpu: 2.72309s

19 -4.4723601e+02 0.00e+00 2.40e-01 -11.0 1.91e-02 - 1.00e+00 2.50e-01f 3

[2020-06-24 17:51:11] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i20] iter: 20 cost: -447.236 time_iter: wall: 0.0900518s cpu: 0.153743s time_optim: wall: 1.45085s cpu: 2.87684s

iter objective inf_pr inf_du lg(mu) ||d|| lg(rg) alpha_du alpha_pr ls

20 -4.4723605e+02 0.00e+00 4.28e-02 -11.0 3.79e-02 - 1.00e+00 6.25e-02f 5

Number of Iterations....: 20

(scaled) (unscaled)

Objective...............: -4.4723605258672342e+02 -4.4723605258672342e+02

Dual infeasibility......: 4.2768567429715201e-02 4.2768567429715201e-02

Constraint violation....: 0.0000000000000000e+00 0.0000000000000000e+00

Complementarity.........: 1.0056120563555069e-11 1.0056120563555069e-11

Overall NLP error.......: 4.2768567429715201e-02 4.2768567429715201e-02

Number of objective function evaluations = 92

Number of objective gradient evaluations = 21

Number of equality constraint evaluations = 0

Number of inequality constraint evaluations = 0

Number of equality constraint Jacobian evaluations = 0

Number of inequality constraint Jacobian evaluations = 0

Number of Lagrangian Hessian evaluations = 0

Total CPU secs in IPOPT (w/o function evaluations) = 0.213

Total CPU secs in NLP function evaluations = 1.429

EXIT: Maximum Number of Iterations Exceeded.

[2020-06-24 17:51:11] [INF] [0:139634512226048/dweindl-ThinkPad-L480] [o0i21] Optimizer status 1, final llh: -4.472361e+02, time: wall: 1.451638 cpu: 2.876838.

[2020-06-24 17:51:13] [INF] [0:139634931677120/dweindl-ThinkPad-L480] Walltime on master: 3.306761s, CPU time of all processes: 13.315272s

[2020-06-24 17:51:13] [INF] [0:139634931677120/dweindl-ThinkPad-L480] Sent termination signal to workers.

Analyze Results¶

[7]:

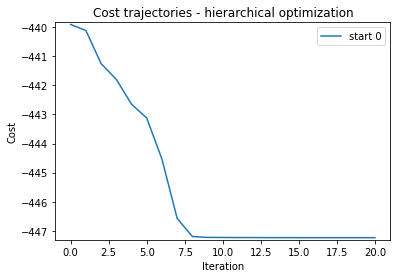

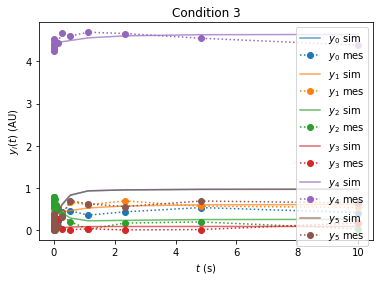

trajectories_hierarchical = parpe.getCostTrajectories(hdf5_pe_output_file_hierarchical)

ax = parpe.plotting.plotCostTrajectory(trajectories_hierarchical, scaleToIteration=0, log=False);

ax.set_title('Cost trajectories - hierarchical optimization')

trajectories_hierarchical

[7]:

array([[-439.90444215],

[-440.11048578],

[-441.25031421],

[-441.7929597 ],

[-442.64439443],

[-443.12253462],

[-444.5376804 ],

[-446.57693735],

[-447.1961445 ],

[-447.22727595],

[-447.22878988],

[-447.23182573],

[-447.23222174],

[-447.23457001],

[-447.23480433],

[-447.23572432],

[-447.23593991],

[-447.23597489],

[-447.23600574],

[-447.23600689],

[-447.23605259]])

[8]:

num_starts = trajectories_hierarchical.shape[1]

for start_idx in range(num_starts):

parpe.compare_optimization_results_to_true_parameters(hdf5_pe_output_file_hierarchical, start_idx=start_idx)

# __Exp____ __Act______ __Err______ __RelErr___ __ID_______

0: 1.00000 0.30545 -0.69455 -0.69455 p1

1: 0.50000 0.30115 -0.19885 -0.39771 p2

2: 0.40000 0.16319 -0.23681 -0.59202 p3

3: 2.00000 1.81038 -0.18962 -0.09481 p4

4: 0.10000 0.04623 -0.05377 -0.53775 p5

5: 2.00000 0.16235 -1.83765 -0.91882 scaling_x1_common

6: 3.00000 2.99965 -0.00035 -0.00012 offset_x2_batch_0

7: 0.20000 0.13704 -0.06296 -0.31482 x1withsigma_sigma

8: 4.00000 4.00120 0.00120 0.00030 offset_x2_batch_1

Status: 1

Cost: -447.236053 (expected: -0.000000)

[18]:

!rm -f simh.h5

!{example_binary_dir}/example_steadystate_multi_simulator {hdf5_pe_output_file_hierarchical} /inputData {hdf5_pe_output_file_hierarchical} /inputData simh.h5 / --at-optimum --nompi --compute-inner

Running --at-optimum for

conditions from deletemehierarchical/_rank00000.h5:/inputData and

parameters from deletemehierarchical/_rank00000.h5:/inputData

> simh.h5:/

Running for start 0

Starting simulation. Number of conditions: 4

[2020-06-24 17:53:21] [DBG] [-1:140138827208640/] [] HierarchicalOptimizationWrapper parameters: 9 total, 5 numerical, 1 proportionality, 2 offset, 1 sigma

[19]:

(measured, simulated, timepoints, llh) = parpe.readSimulationsFromFile('simh.h5')

start_idx = '0'

[20]:

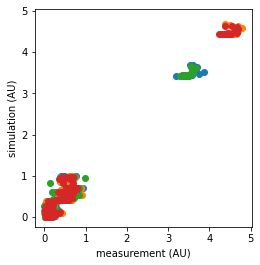

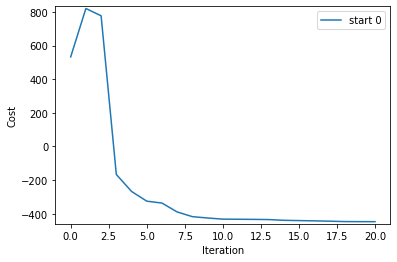

parpe.plotting.plotCorrelation(measured[start_idx], simulated[start_idx]);

[22]:

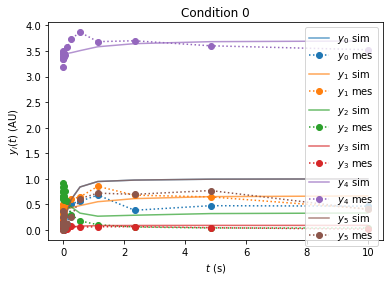

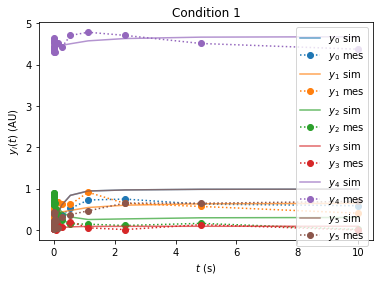

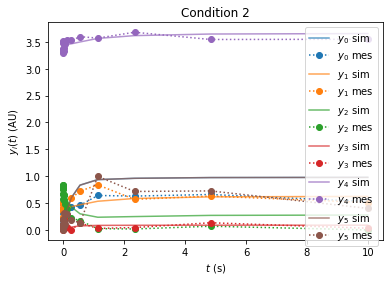

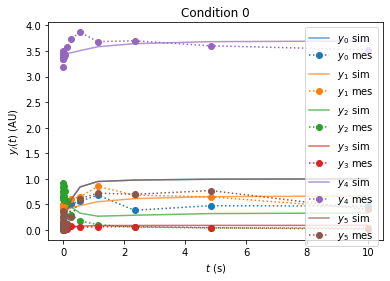

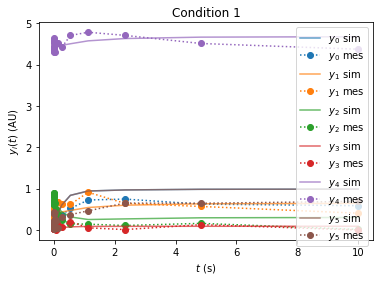

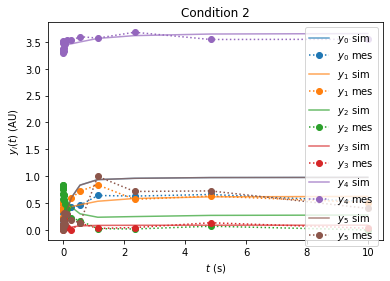

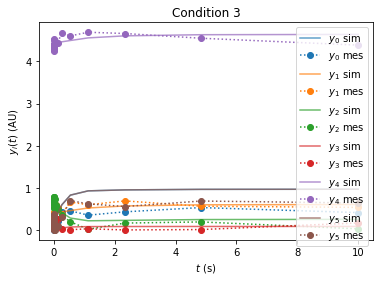

parpe.plotting.plotTrajectoryFits(measured[start_idx], simulated[start_idx], timepoints[start_idx])

Standard optimization Ipopt¶

Set parameter estimation options¶

Parameter estimation settings specified inside an HDF5 file. Those can be changed from any programming language with HDF5 bindings, with hdfview (https://www.hdfgroup.org/downloads/hdfview/), or with a helper script included in parPE, as demonstrated here:

[23]:

# enable derivate checker

input_file = f'{example_data_dir}/example_data.h5'

# Perform two optimizer runs from different starting points

!{optimization_options_py} {input_file} -s numStarts 1

# Disable hierarchical optimization

!{optimization_options_py} {input_file} -s hierarchicalOptimization 0

# Print settings

!{optimization_options_py} {input_file}

hierarchicalOptimization 0

numStarts 1

optimizer 0

retryOptimization 0

ceres/max_num_iterations 100

fmincon/GradObj b'on'

fmincon/MaxFunEvals 10000000.0

fmincon/MaxIter 100

fmincon/TolFun 0

fmincon/TolX 1e-08

fmincon/algorithm b'interior-point'

fmincon/display b'iter'

ipopt/acceptable_iter 1

ipopt/acceptable_obj_change_tol 1e-05

ipopt/acceptable_tol 1e-05

ipopt/hessian_approximation b'limited-memory'

ipopt/limited_memory_update_type b'bfgs'

ipopt/max_iter 20

ipopt/tol 1e-09

ipopt/watchdog_shortened_iter_trigger 0

toms611/mxfcal 100000000.0

Optimize¶

[24]:

# !(cd {parpe_build_root} && exec make -j12) # rebuild

!rm -rf deleteme # delete old result files

# optimize (using a single process)

!PARPE_NO_DEBUG=1 {example_binary_dir}/example_steadystate_multi -o deleteme/ {example_data_dir}/example_data.h5

[2020-06-24 17:54:17] [INF] [-1:140203247966144/] Reading random initial theta 0 from /optimizationOptions/randomStarts

List of user-set options:

Name Value used

acceptable_iter = 1 yes

acceptable_obj_change_tol = 1e-05 yes

acceptable_tol = 1e-05 yes

hessian_approximation = limited-memory yes

limited_memory_update_type = bfgs yes

max_iter = 20 yes

print_level = 5 yes

print_user_options = yes yes

tol = 1e-09 yes

watchdog_shortened_iter_trigger = 0 yes

******************************************************************************

This program contains Ipopt, a library for large-scale nonlinear optimization.

Ipopt is released as open source code under the Eclipse Public License (EPL).

For more information visit http://projects.coin-or.org/Ipopt

******************************************************************************

This is Ipopt version 3.12.12, running with linear solver ma27.

Number of nonzeros in equality constraint Jacobian...: 0

Number of nonzeros in inequality constraint Jacobian.: 0

Number of nonzeros in Lagrangian Hessian.............: 0

Total number of variables............................: 9

variables with only lower bounds: 0

variables with lower and upper bounds: 9

variables with only upper bounds: 0

Total number of equality constraints.................: 0

Total number of inequality constraints...............: 0

inequality constraints with only lower bounds: 0

inequality constraints with lower and upper bounds: 0

inequality constraints with only upper bounds: 0

[2020-06-24 17:54:18] [INF] [-1:140202953152256/] [o0i0] iter: 0 cost: 532.303 time_iter: wall: 0.150796s cpu: 0.142117s time_optim: wall: 0.150796s cpu: 0.142117s

iter objective inf_pr inf_du lg(mu) ||d|| lg(rg) alpha_du alpha_pr ls

0 5.3230269e+02 0.00e+00 1.00e+02 0.0 0.00e+00 - 0.00e+00 0.00e+00 0

[2020-06-24 17:54:18] [INF] [-1:140202953152256/] [o0i1] iter: 1 cost: 820.034 time_iter: wall: 0.0978683s cpu: 0.0851084s time_optim: wall: 0.248665s cpu: 0.227225s

1 8.2003355e+02 0.00e+00 4.00e+01 2.0 5.98e+01 - 9.91e-01 2.66e-02f 2

[2020-06-24 17:54:18] [INF] [-1:140202953152256/] [o0i2] iter: 2 cost: 776.643 time_iter: wall: 0.0802227s cpu: 0.0728087s time_optim: wall: 0.328887s cpu: 0.300034s

2 7.7664256e+02 0.00e+00 5.20e+01 2.2 5.80e-01 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:54:18] [INF] [-1:140202953152256/] [o0i3] iter: 3 cost: -166.319 time_iter: wall: 0.089962s cpu: 0.0772412s time_optim: wall: 0.41885s cpu: 0.377275s

3 -1.6631926e+02 0.00e+00 4.12e+01 1.5 2.34e+00 - 1.00e+00 2.50e-01f 3

[2020-06-24 17:54:18] [INF] [-1:140202953152256/] [o0i4] iter: 4 cost: -267.208 time_iter: wall: 0.0847308s cpu: 0.0763528s time_optim: wall: 0.503581s cpu: 0.453628s

4 -2.6720761e+02 0.00e+00 3.92e+00 0.2 1.85e-01 - 9.91e-01 1.00e+00f 1

[2020-06-24 17:54:18] [INF] [-1:140202953152256/] [o0i5] iter: 5 cost: -325.047 time_iter: wall: 0.0756548s cpu: 0.0673815s time_optim: wall: 0.579236s cpu: 0.521009s

5 -3.2504664e+02 0.00e+00 4.86e+00 -1.2 1.59e-01 - 9.84e-01 1.00e+00f 1

[2020-06-24 17:54:18] [INF] [-1:140202953152256/] [o0i6] iter: 6 cost: -336.398 time_iter: wall: 0.0723731s cpu: 0.0639552s time_optim: wall: 0.651609s cpu: 0.584965s

6 -3.3639830e+02 0.00e+00 1.12e+01 -1.9 8.74e-01 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:54:18] [INF] [-1:140202953152256/] [o0i7] iter: 7 cost: -389.279 time_iter: wall: 0.0873986s cpu: 0.0726503s time_optim: wall: 0.739008s cpu: 0.657615s

7 -3.8927856e+02 0.00e+00 6.91e+00 -1.8 7.08e-01 - 1.00e+00 2.50e-01f 3

[2020-06-24 17:54:18] [INF] [-1:140202953152256/] [o0i8] iter: 8 cost: -417.125 time_iter: wall: 0.0832864s cpu: 0.0703492s time_optim: wall: 0.822294s cpu: 0.727964s

8 -4.1712507e+02 0.00e+00 2.72e+00 -2.7 5.18e-01 - 1.00e+00 5.00e-01f 2

[2020-06-24 17:54:18] [INF] [-1:140202953152256/] [o0i9] iter: 9 cost: -425.124 time_iter: wall: 0.0853009s cpu: 0.072256s time_optim: wall: 0.907595s cpu: 0.80022s

9 -4.2512435e+02 0.00e+00 2.77e+00 -4.1 1.14e-01 - 1.00e+00 5.00e-01f 2

[2020-06-24 17:54:18] [INF] [-1:140202953152256/] [o0i10] iter: 10 cost: -431.91 time_iter: wall: 0.0697795s cpu: 0.0616751s time_optim: wall: 0.977375s cpu: 0.861895s

iter objective inf_pr inf_du lg(mu) ||d|| lg(rg) alpha_du alpha_pr ls

10 -4.3191005e+02 0.00e+00 9.02e-01 -5.5 4.63e-02 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:54:18] [INF] [-1:140202953152256/] [o0i11] iter: 11 cost: -432.548 time_iter: wall: 0.0834681s cpu: 0.0699167s time_optim: wall: 1.06084s cpu: 0.931812s

11 -4.3254817e+02 0.00e+00 7.07e-01 -7.2 3.94e-02 - 1.00e+00 5.00e-01f 2

[2020-06-24 17:54:18] [INF] [-1:140202953152256/] [o0i12] iter: 12 cost: -433.253 time_iter: wall: 0.0688184s cpu: 0.0608798s time_optim: wall: 1.12966s cpu: 0.992692s

12 -4.3325317e+02 0.00e+00 5.07e-01 -8.8 3.00e-02 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:54:19] [INF] [-1:140202953152256/] [o0i13] iter: 13 cost: -434.385 time_iter: wall: 0.0718203s cpu: 0.0626103s time_optim: wall: 1.20148s cpu: 1.0553s

13 -4.3438479e+02 0.00e+00 6.25e-01 -10.3 4.40e-02 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:54:19] [INF] [-1:140202953152256/] [o0i14] iter: 14 cost: -438.759 time_iter: wall: 0.0686035s cpu: 0.0598197s time_optim: wall: 1.27009s cpu: 1.11512s

14 -4.3875867e+02 0.00e+00 3.98e+00 -11.0 5.21e-01 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:54:19] [INF] [-1:140202953152256/] [o0i15] iter: 15 cost: -440.48 time_iter: wall: 0.0965021s cpu: 0.0791499s time_optim: wall: 1.36659s cpu: 1.19427s

15 -4.4047967e+02 0.00e+00 4.39e+00 -11.0 2.61e+00 - 1.00e+00 6.22e-02f 5

[2020-06-24 17:54:19] [INF] [-1:140202953152256/] [o0i16] iter: 16 cost: -442.029 time_iter: wall: 0.0895043s cpu: 0.0736639s time_optim: wall: 1.45609s cpu: 1.26794s

16 -4.4202943e+02 0.00e+00 2.94e+00 -11.0 4.38e-01 - 1.00e+00 1.25e-01f 4

[2020-06-24 17:54:19] [INF] [-1:140202953152256/] [o0i17] iter: 17 cost: -443.967 time_iter: wall: 0.0692997s cpu: 0.0606437s time_optim: wall: 1.52539s cpu: 1.32858s

17 -4.4396683e+02 0.00e+00 1.78e+00 -11.0 1.93e-01 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:54:19] [INF] [-1:140202953152256/] [o0i18] iter: 18 cost: -446.323 time_iter: wall: 0.0702509s cpu: 0.0613218s time_optim: wall: 1.59564s cpu: 1.3899s

18 -4.4632309e+02 0.00e+00 1.06e+00 -11.0 9.67e-02 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:54:19] [INF] [-1:140202953152256/] [o0i19] iter: 19 cost: -446.807 time_iter: wall: 0.0695901s cpu: 0.0606455s time_optim: wall: 1.66523s cpu: 1.45055s

19 -4.4680666e+02 0.00e+00 7.12e-01 -11.0 1.64e-01 - 1.00e+00 1.00e+00f 1

[2020-06-24 17:54:19] [INF] [-1:140202953152256/] [o0i20] iter: 20 cost: -446.911 time_iter: wall: 0.0982874s cpu: 0.0793654s time_optim: wall: 1.76352s cpu: 1.52991s

iter objective inf_pr inf_du lg(mu) ||d|| lg(rg) alpha_du alpha_pr ls

20 -4.4691082e+02 0.00e+00 5.78e-01 -11.0 5.12e-01 - 1.00e+00 3.12e-02f 6

Number of Iterations....: 20

(scaled) (unscaled)

Objective...............: -1.0020280299723407e+01 -4.4691081555274133e+02

Dual infeasibility......: 5.7791560739777803e-01 2.5775400258007569e+01

Constraint violation....: 0.0000000000000000e+00 0.0000000000000000e+00

Complementarity.........: 1.0893437113616458e-11 4.8585415966389838e-10

Overall NLP error.......: 5.7791560739777803e-01 2.5775400258007569e+01

Number of objective function evaluations = 77

Number of objective gradient evaluations = 21

Number of equality constraint evaluations = 0

Number of inequality constraint evaluations = 0

Number of equality constraint Jacobian evaluations = 0

Number of inequality constraint Jacobian evaluations = 0

Number of Lagrangian Hessian evaluations = 0

Total CPU secs in IPOPT (w/o function evaluations) = 0.578

Total CPU secs in NLP function evaluations = 1.536

EXIT: Maximum Number of Iterations Exceeded.

[2020-06-24 17:54:19] [INF] [-1:140202953152256/] [o0i21] Optimizer status 1, final llh: -4.469108e+02, time: wall: 1.764039 cpu: 1.529912.

[2020-06-24 17:54:20] [INF] [-1:140203247966144/] Walltime on master: 3.006277s, CPU time of all processes: 2.549641s

Analyze results¶

A good start for checking the results is a look at optimizer trajectories. This can be easily done using the parPE Python package:

[25]:

hdf5_pe_output_file_standard = 'deleteme/_rank00000.h5'

trajectories_standard = parpe.getCostTrajectories(hdf5_pe_output_file_standard)

parpe.plotting.plotCostTrajectory(trajectories_standard, log=False);

Since this example uses artificial data based on model simulations with known parameters, we can compare them to the optimization results:

[26]:

parpe.compare_optimization_results_to_true_parameters(hdf5_pe_output_file_standard, start_idx='0')

# __Exp____ __Act______ __Err______ __RelErr___ __ID_______

0: 1.00000 0.27496 -0.72504 -0.72504 p1

1: 0.50000 0.30148 -0.19852 -0.39704 p2

2: 0.40000 0.14082 -0.25918 -0.64794 p3

3: 2.00000 1.80128 -0.19872 -0.09936 p4

4: 0.10000 0.04838 -0.05162 -0.51620 p5

5: 2.00000 0.17859 -1.82141 -0.91070 scaling_x1_common

6: 3.00000 3.00079 0.00079 0.00026 offset_x2_batch_0

7: 0.20000 0.13613 -0.06387 -0.31935 x1withsigma_sigma

8: 4.00000 4.00615 0.00615 0.00154 offset_x2_batch_1

Status: 1

Cost: -446.910816 (expected: -0.000000)

[30]:

# Simulate with optimal parameters, save results

!rm -f sim.h5 # remove files from previous run

!{example_binary_dir}/example_steadystate_multi_simulator {hdf5_pe_output_file_standard} /inputData {hdf5_pe_output_file_standard} /inputData sim.h5 / --at-optimum --nompi --nocompute-inner

Running --at-optimum for

conditions from deleteme/_rank00000.h5:/inputData and

parameters from deleteme/_rank00000.h5:/inputData

> sim.h5:/

Running for start 0

Starting simulation. Number of conditions: 4

[31]:

# Load simulated outputs

(measured, simulated, timepoints, llh) = parpe.readSimulationsFromFile('simh.h5')

start_idx = '0'

[32]:

# Plot correlation of measurements (training data) and model simulation with optimized parameters

parpe.plotting.plotCorrelation(measured[start_idx], simulated[start_idx]);

[33]:

# Plot measurement trajectories (training data) and compare to model simulation with optimized parameters

parpe.plotting.plotTrajectoryFits(measured[start_idx], simulated[start_idx], timepoints[start_idx])

Standard optimization vs. hierarchical optimization¶

[34]:

# Accuracy of parameter estimates

start_idx = '0'

print('Hierarchical:')

parpe.compare_optimization_results_to_true_parameters(hdf5_pe_output_file_hierarchical, start_idx=start_idx)

print()

print('Standard:')

parpe.compare_optimization_results_to_true_parameters(hdf5_pe_output_file_standard, start_idx=start_idx)

Hierarchical:

# __Exp____ __Act______ __Err______ __RelErr___ __ID_______

0: 1.00000 0.30545 -0.69455 -0.69455 p1

1: 0.50000 0.30115 -0.19885 -0.39771 p2

2: 0.40000 0.16319 -0.23681 -0.59202 p3

3: 2.00000 1.81038 -0.18962 -0.09481 p4

4: 0.10000 0.04623 -0.05377 -0.53775 p5

5: 2.00000 0.16235 -1.83765 -0.91882 scaling_x1_common

6: 3.00000 2.99965 -0.00035 -0.00012 offset_x2_batch_0

7: 0.20000 0.13704 -0.06296 -0.31482 x1withsigma_sigma

8: 4.00000 4.00120 0.00120 0.00030 offset_x2_batch_1

Status: 1

Cost: -447.236053 (expected: -0.000000)

Standard:

# __Exp____ __Act______ __Err______ __RelErr___ __ID_______

0: 1.00000 0.27496 -0.72504 -0.72504 p1

1: 0.50000 0.30148 -0.19852 -0.39704 p2

2: 0.40000 0.14082 -0.25918 -0.64794 p3

3: 2.00000 1.80128 -0.19872 -0.09936 p4

4: 0.10000 0.04838 -0.05162 -0.51620 p5

5: 2.00000 0.17859 -1.82141 -0.91070 scaling_x1_common

6: 3.00000 3.00079 0.00079 0.00026 offset_x2_batch_0

7: 0.20000 0.13613 -0.06387 -0.31935 x1withsigma_sigma

8: 4.00000 4.00615 0.00615 0.00154 offset_x2_batch_1

Status: 1

Cost: -446.910816 (expected: -0.000000)

The previous block shows, that parameters are recovered more accurately by the hierarchical optimization approach.

[35]:

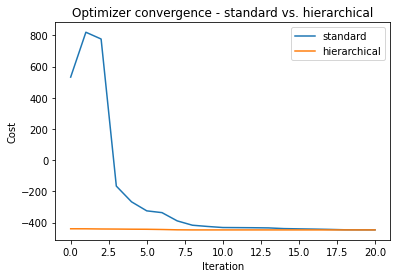

ax = parpe.plotting.plotCostTrajectory(trajectories_standard, log=False)

parpe.plotting.plotCostTrajectory(trajectories_hierarchical, log=False, ax=ax)

ax.autoscale(True)

ax.legend(['standard', 'hierarchical'])

ax.set_title('Optimizer convergence - standard vs. hierarchical');

Although it does not make a great difference for this tiny example, we can see, that the hierarchical approach convergences faster.

[36]:

# Show best final objective for the two approaches (smaller means better):

print('Best objective standard:', np.min(trajectories_standard))

print('Best objective hierarchical:', np.min(trajectories_hierarchical))

Best objective standard: -446.9108155527413

Best objective hierarchical: -447.2360525867234